As usual, it’s taken us longer than we would like. The usual problem applies: finding planning and recording slots we can both make. But I think the episode turned out well. It was certainly fun to make.

So here are the show notes.

Episode 35 “In Search Of EXCELence?” long show notes

The episode title refers to the topic in our Topics section.

Since our last episode, Marna was in Kansas City for SHARE, and in Germany for Zeit für Z. Martin has been to South Africa to visit a customer.

Take note of the z/OS 3.1 functional dependency moving from Semeru 11 to Semeru 17 before November 2025.

Manual of the Moment: MVS Extended Addressability Guide

- All about 64-bit addressing, data spaces, hiperspaces, and cross memory.

- Well written, good introductions and then some.

Mainframe – Looking at ages of fixes you haven’t installed yet

- This ties back to our Episode 34, when we talked about the UUID.

- The UUID for z/OSMF Software Management lets you know for certain, when used according to the rules, which SMP/E CSI represents your active system.

- This episode’s topic is still in the vein of gaining insight into the service level of your system: how long has an IBM PTF been available?

- Kind of related to Recommended Service Update (RSU), as RSU marking is a set of rules for how a PTF ages before it gets recommended.

- But this discussion is specifically about knowing the date an IBM PTF became available for you to install.

- Other vendors already make their PTF availability dates easily discernible, and now IBM has done that too.

- How to know when the IBM PTF was available:

- IBM has started adding a REWORK date to PTFs. The format is yyyyddd, a Julian date.

- Take note, though, that the REWORK date put on the PTF may be a day or two before the date it was actually made available. Usually that difference of a day or two isn’t important.

- Marna looked at a considerable sample of PTFs, comparing their actual Closed Dates with their REWORK dates, and most differ by one day.

- A use case where the PTF Close Date can help:

- Some enterprises have a policy that Security/Integrity (SECINT) PTFs that meet some criteria must be installed within 90 days of availability.

- So that’s where the “availability” value comes in.

- If you’ve RECEIVEd PTFs, it certainly isn’t hard to know when they closed. Add the SECINT SOURCEID to know which PTFs are SECINT.

- A useful reminder: the SECINT SOURCEID marking is only available to those who have access to the IBM Z and LinuxONE Security Portal.

- Combine the two pieces of information to know how long a security integrity fix has been available.

- That way you can see how well you’re doing against your 90-day policy.

- Also it can give you your action list with a deadline.

- This is also a great time to remind folks that using an automated RECEIVE ORDER for PTFs gets you all the PTFs that are applicable to your system. And that means the REWORK date is available at your fingertips right away.

- If you do not automate RECEIVE ORDER, you are left with the rather long, manual way of retrieving them, likely from Shopz.

- How about viewing rework dates on PTFs that are already installed?

- We now know the date a PTF was available, and you’ve always known the date and time the PTF was APPLYed.

- So you could gather a nice bit of data about how long fixes are available before they are installed, and show it in a lovely graph.

- For another view of the data: customers roll fixes across their systems, so you could even compare systems to see the ages of fixes as they roll across your enterprise.

- Don’t forget that when comparing systems as fixes are deployed, the UUID comes in very handy.

- Other interesting side effects of knowing the date an IBM PTF was available:

- The RSU designation. Now you can see how long it took that PTF to become recommended, if such a thing floats your boat.

- Another example is looking at New Function PTFs, which are likely to have a HOLD for ENHancement.

- You could do spiffy things like notice how long a New Function PTF has aged before becoming Recommended.

- Where to get the REWORK date from:

- As you would expect, you can see the REWORK date in queries today (for instance, when you query the SMP/E CSI with the LIST command).

- Although you might not see it in all the z/OSMF Software Management and Software Update locations just yet, we are aware that those are other places where it should be surfaced.

- Now that we have this piece of data, the possibilities for gaining insight just got a lot bigger. Using it in conjunction with the UUID makes it even more powerful.

- Customers can make better decisions and get more info on how they’re carrying them out.
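To make the PTF-age arithmetic concrete, here is a minimal Python sketch. It assumes you have already extracted, for each PTF, its REWORK date, whether it carries the SECINT SOURCEID, and its APPLY date (for example, from SMP/E LIST output); that extraction step is not shown, and the `Ptf` record, the function names and the PTF numbers are all hypothetical. Because the REWORK date can be a day or two earlier than actual availability, treat the computed ages as approximate.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta
from typing import List, Optional, Tuple


@dataclass
class Ptf:
    """Hypothetical per-PTF record, assumed to be extracted from SMP/E beforehand."""
    name: str                # PTF number (the values below are made up)
    rework: str              # REWORK date in yyyyddd (Julian) form
    secint: bool             # True if the PTF has the SECINT SOURCEID
    applied: Optional[date]  # APPLY date, or None if not yet applied


def rework_to_date(yyyyddd: str) -> date:
    """Convert a yyyyddd Julian date (year plus day of year) to a calendar date."""
    return datetime.strptime(yyyyddd, "%Y%j").date()


def age_in_days(ptf: Ptf, today: date) -> int:
    """Approximate days since the PTF became available, based on its REWORK date."""
    return (today - rework_to_date(ptf.rework)).days


def days_before_apply(ptf: Ptf) -> Optional[int]:
    """Days between availability and APPLY, or None if the PTF is not applied."""
    if ptf.applied is None:
        return None
    return (ptf.applied - rework_to_date(ptf.rework)).days


def secint_action_list(ptfs: List[Ptf], today: date,
                       policy_days: int = 90) -> List[Tuple[str, date, int]]:
    """Unapplied SECINT PTFs as (name, deadline, days left), earliest deadline first.

    A negative 'days left' means the policy deadline has already passed."""
    actions = []
    for ptf in ptfs:
        if ptf.secint and ptf.applied is None:
            deadline = rework_to_date(ptf.rework) + timedelta(days=policy_days)
            actions.append((ptf.name, deadline, (deadline - today).days))
    return sorted(actions, key=lambda action: action[1])


if __name__ == "__main__":
    today = date(2024, 10, 1)
    ptfs = [
        Ptf("XX00001", "2024150", secint=True, applied=None),
        Ptf("XX00002", "2024250", secint=True, applied=None),
        Ptf("XX00003", "2024100", secint=False, applied=date(2024, 6, 1)),
    ]
    for name, deadline, days_left in secint_action_list(ptfs, today):
        print(f"{name}: install by {deadline} ({days_left} days left)")
    print(f"XX00003 waited {days_before_apply(ptfs[2])} days before APPLY")
```

Running it lists the unapplied SECINT PTFs in deadline order, with a negative day count for any already outside the window, followed by how long the applied PTF waited before APPLY. The `policy_days` parameter exists because 90 days is an example policy rather than a rule.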

Performance – Drawers, Of Course

- This topic is a synopsis of Martin’s new 2024 presentation. The discussion is primarily about z16.

- Definition of a drawer:

- A bunch of processing unit (PU) chips

- Memory

- Connectors

- ICA-SR

- To other drawers

- To I/O drawers

- You can buy 1 to 4 drawers in a z16 A01.

- 4-drawer models are Factory Build Only.

- Drawers and frames definition:

- Frames are 19” racks

- 0-3 PU drawers in a frame

- In the A and B frames

- z16 cache hierarchy

- PU Chip definition:

- 8 cores

- Each has its own L1 cache

- Each has its own L2 cache

- Shared as virtual L3 cache

- Fair proportion remains as the core’s L2

- L2 and L3 same distance from the owner

- Part of a Dual Chip Module (DCM)

- DCM definition:

- 2 PU chips

- Coupled by M bus

- Connected to the other 3 DCMs in the drawer by X Bus

- Virtual Level 4 cache across the drawer

- Drawers inter-connected by A Bus

- Much further away

- Remote L4 cache as well as memory

- LPARs should fit into drawers

- All of an LPAR’s logical processors and memory should be in the same drawer

- Important because cross-drawer memory and cache accesses are expensive

- Often shows up as bad Cycles per Instruction (CPI)

- This is the reason why, though z/OS V2.5 and higher can support 16TB of memory, you really shouldn’t go above 10TB in a single LPAR.

- Processor drawer growth…

- … with each processor generation

- Higher max core count per drawer

- Each core faster

- Max memory increased per drawer most generations

- Most customers have rather less than the maximum memory per drawer

- However, z/OS workloads are growing fast

- Linux also

- Also Coupling Facilities, especially with z16

- So it’s a race against time

- The resolution is often splitting LPARs

- Can have other benefits

- This is not quick

- Refer to Episode 17, “Two Good, Four Better” (March 2020)

- Drawers and LPAR placement

- z/OS and ICF and IFL LPARs are separated by drawer

- Until they aren’t

- z/OS LPARs start in the first drawer and upwards

- ICFs and IFLs in the last drawer downwards

- Collisions are possible!

- More drawers give more choices for LPAR placement

- And reduces the chances of LPAR types colliding

- PR/SM makes the decision on where LPARs are.

- For both CPU and memory

- However, a sensible LPAR design can help influence the PR/SM decision.

- Drawers and resilience

- Drawer failure is a rare event, but you have to design for it

- More drawers give the surviving drawers a better chance of coping with the workload

- If planned for, LPARs can move to surviving drawers

- Drawers and sustainability

- Each drawer has an energy footprint

- Larger than a core

- Improved in each generation

- Also depends on, for example, memory configuration

- Drawers and frames

- Frames might limit the number of processor drawers

- Frames sometimes limited by floor space considerations

- Depends also on I/O configuration

- Instrumentation in SMF records

- SMF 70

- Reporting In Partition Data Report

- CPU Data Section

- HiperDispatch Parking

- Logical Partition Data Section

- Describes LPAR-level characteristics

- Logical Processor Data Section

- Core-level utilisation statistics

- Polar weights and polarities

- z16 adds core home addresses

- Online time

- Logical Core Data Section

- Relates threads to cores

- Only for the record-cutting z/OS system

- Allows Parking analysis

- SMF 113

- Effects of LPAR design

- Sourcing of data in the cache hierarchy

- Points out remote accesses

- Including cross-drawer

- Cycles Per Instruction (CPI)

- One of the acid tests of LPAR design

- Record cut by z/OS

- Granularity is logical processor in an interval

- Can’t see inside other LPARs

- SMF 99-14

- Largely obsoleted by SMF 70-1

- Uniquely has Affinity Nodes

- Also has home addresses

- Only for the record-cutting system

- Obviously only for z/OS systems

- So no ICF, IFL, Physical

- Supports machines prior to z16
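The earlier guideline that all of an LPAR’s logical processors and memory should be in the same drawer can be expressed as a quick sanity check on an LPAR design. This is a minimal sketch, not how PR/SM decides placement: the per-drawer capacities are inputs you supply (the 10 TB memory figure echoes the single-LPAR guidance above, while the 40-core figure is purely hypothetical), and the `Lpar` record and function name are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Lpar:
    """Illustrative LPAR definition for a quick drawer-fit check."""
    name: str
    logical_processors: int  # logical processors (cores) defined for the LPAR
    memory_tb: float         # memory assigned to the LPAR, in TB


def drawer_fit_warnings(lpars: List[Lpar], cores_per_drawer: int,
                        memory_tb_per_drawer: float) -> List[str]:
    """List the LPARs that could not fit entirely within a single drawer.

    This is only a necessary condition: PR/SM decides actual placement, and
    other LPARs sharing a drawer reduce the capacity really available."""
    warnings = []
    for lpar in lpars:
        if lpar.logical_processors > cores_per_drawer:
            warnings.append(f"{lpar.name}: {lpar.logical_processors} logical "
                            f"processors exceed {cores_per_drawer} cores per drawer")
        if lpar.memory_tb > memory_tb_per_drawer:
            warnings.append(f"{lpar.name}: {lpar.memory_tb} TB of memory exceeds "
                            f"{memory_tb_per_drawer} TB per drawer")
    return warnings


if __name__ == "__main__":
    # Hypothetical capacities: 40 cores is made up; 10 TB echoes the guidance above.
    lpars = [Lpar("PROD1", 32, 8.0), Lpar("PROD2", 48, 12.0)]
    for warning in drawer_fit_warnings(lpars, cores_per_drawer=40,
                                       memory_tb_per_drawer=10.0):
        print(warning)
```

An LPAR that passes this check can still end up spread across drawers if other LPARs already occupy the space, so it only catches designs that cannot fit at all.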

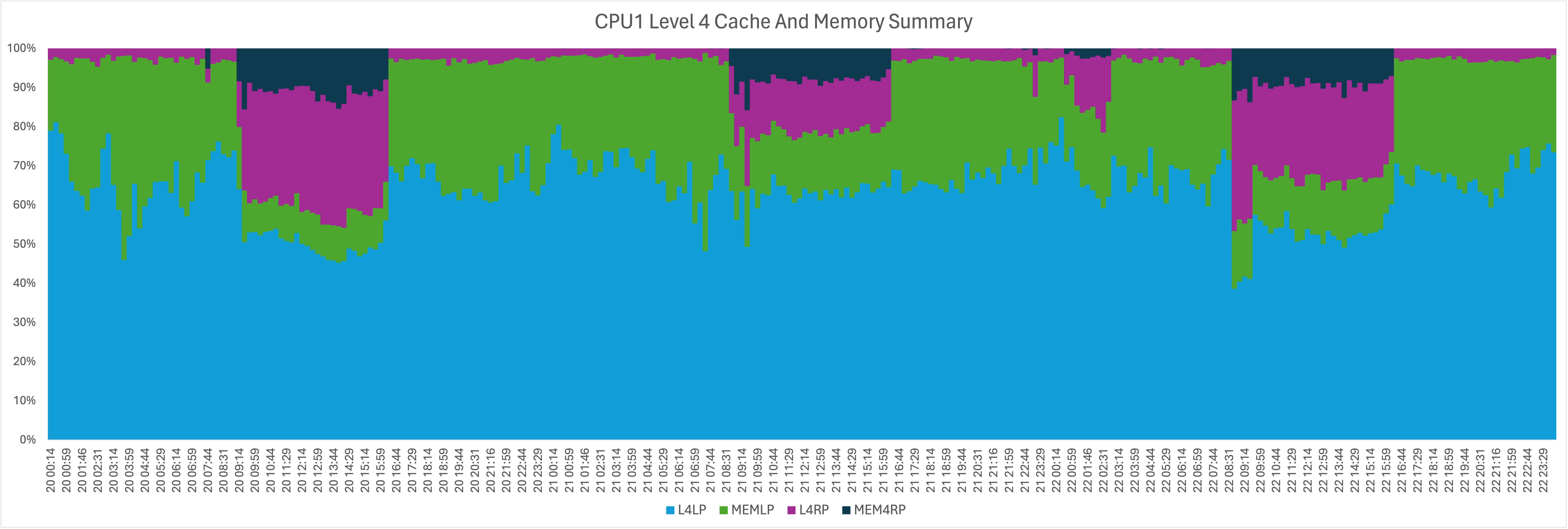

- You can graph cross-drawer memory accesses using John Burg’s formulae

- L4LP and L4RP for Level 4 Cache

- Martin splits MEMP into MEMLP and MEMRP

- But note that it represents “Percentage of Level 1 Cache Misses”

- Part of Martin’s standard SMF 113 analysis now

- In short, there is a lot to think about when it comes to drawer design and what you put in the drawers.
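The SMF 113 calculations boil down to simple ratios. The following Python sketch computes CPI as cycles divided by instructions and expresses each source of Level 1 cache misses as a percentage of all L1 misses, with Level 4 and memory split into local and remote in the spirit of L4LP/L4RP and MEMLP/MEMRP. It is not John Burg’s published formulae: those are defined in terms of specific SMF 113 counters, whereas the source labels and counts here are hypothetical placeholders you would map to the real counters for your machine generation.

```python
from typing import Dict


def cpi(cycles: int, instructions: int) -> float:
    """Cycles Per Instruction for an interval: lower is better."""
    return cycles / instructions


def sourcing_percentages(l1_miss_sources: Dict[str, int]) -> Dict[str, float]:
    """Express each source of L1 cache misses as a percentage of all L1 misses.

    Keys are hypothetical labels; map them to real SMF 113 counters yourself."""
    total = sum(l1_miss_sources.values())
    return {source: 100.0 * count / total
            for source, count in l1_miss_sources.items()}


def cross_drawer_percentage(percentages: Dict[str, float]) -> float:
    """Share of L1 misses satisfied from another drawer's L4 cache or memory."""
    return percentages.get("L4_remote", 0.0) + percentages.get("MEM_remote", 0.0)


if __name__ == "__main__":
    # Made-up counts for one logical processor in one interval.
    counts = {"L2": 700, "L3": 200, "L4_local": 60, "L4_remote": 15,
              "MEM_local": 20, "MEM_remote": 5}
    pct = sourcing_percentages(counts)
    print(f"CPI {cpi(3_000_000, 1_500_000):.2f}")
    for source, value in pct.items():
        print(f"{source:>10}: {value:5.1f}%")
    print(f"Cross-drawer: {cross_drawer_percentage(pct):.1f}%")
```

Summing the two remote percentages gives a single cross-drawer figure per logical processor and interval, which is the kind of series you might graph alongside CPI to judge an LPAR design.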

Topics – Excel Love It Or Hate It

- This section is inspired by Martin’s blog posts

- Sandpapering off the rough corners when using Excel

- What we have used Excel for:

- Marna’s use:

- SMP/E report for HIPERs and PEs – moved to Excel to take notes and to use as a tracking database

- Doing university expenses. Budget, incomes, expenses, and gaps.

- Martin’s use:

- Preparing data for graphing, and graphing it. He does the heavy lifting outside of Excel, creating CSV files for import.

- Graphing automation. Export the graph as a picture for the presentation, using Excel as your graph creator. CSV input can be hard, as the import dialog is cumbersome.

- GSE submissions, with Excel as a tracking database. They need to be in a “portable” format for sharing with others.

- How we use it

- Macros and formulae

- Martin tries to avoid them by doing the calculations externally

- Not really doing “what ifs”

- Basic formulae

- Default graphing scheme

- Martin has to fiddle with any graph automatically generated:

- Font sizes

- Graphic sizes

- Occasionally need a useful colour scheme

- For example, zIIP series are green, GCPs are blue, and offline processors have no fill colour

- Marna hasn’t needed it to be consistent

- Occasional graphing

- Martin’s average customer engagement involves at least 20 handmade graphs

- Some graphs need a definite shading and colour scheme

- What we love about Excel

- Turning data into graphs

- Easy for my most basic uses

- What we hate about it

- Incredibly fiddly to do most things

- Wizards don’t work well for me

- Automation does sand the rough corners off

- But it’s rather tough to do

- Obscure syntax

- Few examples

- Martin tends to use AppleScript (not unexpected)

- Hard to automatically inject, for example, VBA into Excel

- Over-aggressive treatment of cells as dates and times

- Martin has several times had a bonding experience with customers where both are swearing at Excel.
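Martin’s habit of doing the heavy lifting outside Excel and handing it a ready-made CSV file can be sketched with Python’s standard `csv` module. Here, made-up per-LPAR busy samples are summed by interval and processor type, producing one column per type that Excel can graph directly. The sample layout and the function names are assumptions for illustration, not Martin’s actual tooling.

```python
import csv
import io
from collections import defaultdict
from typing import DefaultDict, Dict, List, Tuple

# One made-up sample: (interval start, processor type, % busy for one LPAR).
Sample = Tuple[str, str, float]


def pivot_by_type(samples: List[Sample]) -> Dict[str, Dict[str, float]]:
    """Sum busy across LPARs for each interval and processor type."""
    table: DefaultDict[str, DefaultDict[str, float]] = defaultdict(
        lambda: defaultdict(float))
    for interval, ptype, busy in samples:
        table[interval][ptype] += busy
    return table


def to_csv(table: Dict[str, Dict[str, float]], types: List[str]) -> str:
    """Render one row per interval and one column per processor type."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Interval"] + types)
    for interval in sorted(table):
        row = table[interval]
        writer.writerow([interval] + [round(row.get(t, 0.0), 1) for t in types])
    return out.getvalue()


if __name__ == "__main__":
    samples = [
        ("2024-10-01 09:00", "GCP", 40.0),
        ("2024-10-01 09:00", "zIIP", 10.0),
        ("2024-10-01 09:00", "GCP", 15.5),   # a second LPAR in the same interval
        ("2024-10-01 09:15", "GCP", 42.0),
    ]
    print(to_csv(pivot_by_type(samples), ["GCP", "zIIP"]), end="")
```

To write a file rather than a string, open it with `newline=""`, as the `csv` documentation recommends, and pass that file object to `csv.writer`. Excel may still turn the interval column into dates and times on import, which is exactly the behaviour complained about above.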

Customer requirements

- z/OSMF REST API for spawning UNIX shells, executing commands

- To quote from the idea “Motivation: Given that we already have APIs for working with TSO Address Spaces, it seems reasonable that there be a set of APIs that offer much of the same functionality for UNIX address spaces via a shell interface. This would help bring z/OS UNIX on par with TSO, and make it more accessible, especially for modernization efforts.”

- We think this is a nice idea.

- You could automate from lots of places

- Status: Future Consideration

Out and about

- Marna and Martin will both be at the GSE Annual Conference in UK, November 4-7, 2024.

- Martin will be in Stockholm for IBM Z Day.

- Martin will have another customer workshop in South Africa.

On the blog

- W: Marna has published 6 blog posts since the last podcast episode.

- P: Martin has published 9 blog posts since the last podcast episode.

- Processor

- Excel