(Originally posted 2012-04-29.)

If you think this title is obscure, bear in mind the original working title was "Send In The Hobgoblins". 1 🙂

When I started to write – actually before the "mind mapping" stage – it was going to be all about inconsistency in the way bits of systems are named. You'll see some of that reflected in the finished article (pun intended) but the post has mostly gone in a different direction.

I'd maintain this one is a slightly less obscure title. But I accept it depends on your pronunciation of "CICS". I've heard many nice variants 2 but I'm depending heavily on just one. (And, obviously, it's my preferred one.)

I thought it'd be interesting to do a "thought experiment" 3 on what you can glean about CICS from SMF. This is a necessarily brief discussion – though it might be worth working up into a presentation one day – and I've probably touched on some of this before. If I have I hope I don't contradict myself too badly here. (Strike One for consistency.) 🙂

I'm going to do this two different ways: I'll talk about the data that's available and then about some themes you can explore with it.

This isn't meant to be an exhaustive survey but is more intended to get you thinking. And in particular in the Themes section you can probably think of your own themes.

Data

As with every application address space, CICS regions can be looked at using standard SMF 30 Interval records:4

- Most notably, you can identify CICS regions from the program name – DFHSIP – and can establish usage patterns such as CPU and memory.

- From RMF Workload Activity Report data (SMF 72 Subtype 3) you get WLM setup and goal attainment information. The SMF 30 record also contains the WLM workload, service class and report class names, so you can easily figure out which CICS regions are in which service class, etc.
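As a minimal sketch of the two points above, assume the SMF 30 Interval records have already been decoded into dictionaries by whatever reporting tool you use. The key names (`jobname`, `program`, `service_class`, `cpu_seconds`) and the sample service class names are made up for illustration; they are not SMF field names. Picking out the CICS regions and grouping them by service class might then look like this:

```python
from collections import defaultdict

# Hypothetical, already-decoded SMF 30 interval records.
# Key names and values are illustrative only, not real SMF field names.
records = [
    {"jobname": "CICSAB00", "program": "DFHSIP", "service_class": "CICSHI", "cpu_seconds": 120.0},
    {"jobname": "CICSAB01", "program": "DFHSIP", "service_class": "CICSHI", "cpu_seconds": 115.0},
    {"jobname": "PAYROLL1", "program": "IKJEFT01", "service_class": "BATCHMED", "cpu_seconds": 40.0},
    {"jobname": "CICSXY1", "program": "DFHSIP", "service_class": "CICSLO", "cpu_seconds": 30.0},
]


def cics_regions_by_service_class(recs):
    """Return {service_class: sorted list of CICS region job names}."""
    regions = defaultdict(set)
    for rec in recs:
        # The program name DFHSIP is what marks an address space as a CICS region.
        if rec["program"] == "DFHSIP":
            regions[rec["service_class"]].add(rec["jobname"])
    return {sc: sorted(names) for sc, names in regions.items()}


by_class = cics_regions_by_service_class(records)
```

A set is used per service class because interval records repeat the same job name many times over a day.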

Obviously generic address space information can only get you so far. To go further you need more specific information. I'm going to divide it into three categories:

- CICS-Specific

- Other Middleware

- I/O

CICS-Specific

CICS can create SMF 110 records at the subsystem and the transaction level – both of which can be reported on by specialist tools such as CICS Performance Analyzer (CICS PA) or by more general SMF reporting tools.

These records contain subsystem performance information, response time components for transactions, and virtual storage information.

Other Middleware

You can get very good information about when CICS transactions access other middleware:5

- For DB2, SMF 101 Accounting Trace gives you lots of information about application performance – as we all know. For CICS transactions the Transaction ID is the middle portion of the Correlation ID (QWHCCV) and the Region is the Connection Name (QWHCCN).6

- Similarly, WebSphere MQ writes application information in the SMF Type 116 record, which can be related to specific CICS regions and transactions.
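To make the DB2 case concrete, here is a small sketch of pulling the region and Transaction ID out of an already-decoded 101 record. The text above only says the Transaction ID is the "middle portion" of QWHCCV. The exact 4/4/4 character split used here (thread type, transaction ID, thread number) is my assumption, so check it against the DB2 documentation for your release before relying on it. The function names and sample values are hypothetical.

```python
def parse_cics_correlation_id(correlation_id):
    """Split a CICS-style Correlation ID (QWHCCV) into its parts.

    ASSUMPTION: a 12-character layout of 4-char thread type,
    4-char transaction ID and 4-char thread number.
    """
    padded = correlation_id.ljust(12)
    return {
        "thread_type": padded[0:4].strip(),
        "transaction_id": padded[4:8].strip(),
        "thread_number": padded[8:12].strip(),
    }


def region_and_transaction(connection_name, correlation_id):
    """Return (region, transaction ID) from QWHCCN and QWHCCV values."""
    parts = parse_cics_correlation_id(correlation_id)
    return connection_name.strip(), parts["transaction_id"]


# Made-up sample values for illustration.
example = region_and_transaction("CICSAB00", "POOLPAY10001")
```

With pairs like this you can aggregate DB2 accounting data by region, by transaction, or by both.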

I/O

Most performance people know about SMF 42 Subtype 6 Data Set Performance records. For data sets OPENed by the CICS region, these records are cut on an interval basis and when the data set is CLOSEd. (This obviously isn't true, for example, for DB2 data.) These records can be used with the File Control information in CICS 110 to see how, for example, LSR buffering and physical I/O performance interact for a VSAM file.

Themes

That was a very brief survey of the most important instrumentation related to CICS. Much of it is not produced by CICS itself. I kept it brief as it's perhaps not the most interesting part of the story: I hope some of the following themes bring it to life.

Naming Convention

(Strike Two for Consistency coming up.) As someone who doesn't know your systems very well, it's interesting to me to figure out what your CICS regions are called, which service classes they're in, and so on.

So, to take a recent example, a customer has two major sets of CICS regions cloned across two LPARs. In one case SYSA has CICSAB00 to CICSAB07 and SYSB has clones CICSAB08 to CICSAB15. In the other case SYSA has CICSXY1, 3 and 5 while SYSB has CICSXY2, 4 and 6. Each of these sets happens to be in its own service class.7

You'll've spotted what I like to call "consistency hobgoblins" 🙂 in this:

- One alternates between systems. The other has ranges on each system.

- One starts at zero. The other starts at 1.

The customer took my teasing them about this inconsistency very well – so I don't think they'll mind me mentioning it here (particularly as, apart from them, nobody will recognise the customer).

And actually it doesn't matter – with one minor exception: The application that uses ranges (rather than alternating) would have to perform a naming "shuffle up" if they were ever to add clones. And this is not just a hypothetical scenario.
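If you wanted to spot these two patterns mechanically, one rough approach is to split each region name into a prefix and a trailing number, and then check whether the numbers on each system form separate ranges or interleave. The sketch below uses the region names from the example above. The function names and the "ranges" / "interleaved" labels are my own invention, and it assumes clones differ only in a trailing number.

```python
import re
from collections import defaultdict


def clone_sets(placements):
    """Group (system, region_name) pairs as {prefix: {system: [numbers]}}."""
    sets = defaultdict(lambda: defaultdict(list))
    for system, name in placements:
        match = re.fullmatch(r"(.*?)(\d+)", name)
        if match is None:
            continue  # no trailing number, so not treated as a clone
        sets[match.group(1)][system].append(int(match.group(2)))
    return {p: {s: sorted(n) for s, n in by_sys.items()} for p, by_sys in sets.items()}


def layout(by_system):
    """Return 'ranges' if each system owns a separate block of numbers."""
    spans = sorted((min(n), max(n)) for n in by_system.values())
    separate = all(prev[1] < nxt[0] for prev, nxt in zip(spans, spans[1:]))
    return "ranges" if separate else "interleaved"


placements = (
    [("SYSA", f"CICSAB{n:02d}") for n in range(0, 8)]
    + [("SYSB", f"CICSAB{n:02d}") for n in range(8, 16)]
    + [("SYSA", f"CICSXY{n}") for n in (1, 3, 5)]
    + [("SYSB", f"CICSXY{n}") for n in (2, 4, 6)]
)

sets_found = clone_sets(placements)
layouts = {prefix: layout(by_sys) for prefix, by_sys in sets_found.items()}
first_numbers = {p: min(min(n) for n in s.values()) for p, s in sets_found.items()}
```

A set that comes out as "ranges" is the one that would need the "shuffle up" if clones were added.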

AOR vs TOR vs QOR vs DOR

You may well be able to tell which role a region plays from SMF 30 – from the "lightness" of the address space. But it's better to use some of the other instrumentation:

- Certainly there are "footprints in the sand" for things like File Control in SMF 110 so you could detect a File-Owning Region (FOR).

- A CICS region that shows up in DB2 Accounting Trace obviously uses DB2 and looks more like a Data-Owning Region (DOR).

- Likewise for SMF 116 and a Queue-Owning Region (QOR).

Now, regions come in all shapes and sizes and the terms "TOR", "AOR", "FOR" and "DOR" strike me as informal terms – and regions could be playing more than one of these roles so these terms aren't mutually exclusive. But the data is there.
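The "footprints" idea can be sketched as a simple lookup: for each data source, record which region names appear in it, and then collect role hints for a region. Everything here is hypothetical (the source labels, the region names, the function name). The result is a set precisely because the roles aren't mutually exclusive.

```python
# Hypothetical evidence: region names seen in each data source.
evidence = {
    "file_control_110": {"CICSF01"},
    "db2_101": {"CICSA01", "CICSA02"},
    "mq_116": {"CICSA02", "CICSQ01"},
}

# Which informal role each kind of footprint hints at.
ROLE_BY_SOURCE = {
    "file_control_110": "FOR",
    "db2_101": "DOR",
    "mq_116": "QOR",
}


def role_hints(region, seen):
    """Return the set of roles a region's footprints suggest (possibly empty)."""
    return {
        role
        for source, role in ROLE_BY_SOURCE.items()
        if region in seen.get(source, set())
    }


hints_a02 = role_hints("CICSA02", evidence)
hints_t01 = role_hints("CICST01", evidence)
```

An empty result only says no footprints were found in the data you collected; it doesn't prove the region is, say, a TOR.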

XCF traffic (from SMF 74 Subtype 2) can be interesting:8 I noticed one application's CICS regions showed up in the job name field for XCF group DFHIR000, but the other application's did not. I was informed there was a VSAM file the first application shared – using CICS Function Shipping I guess.

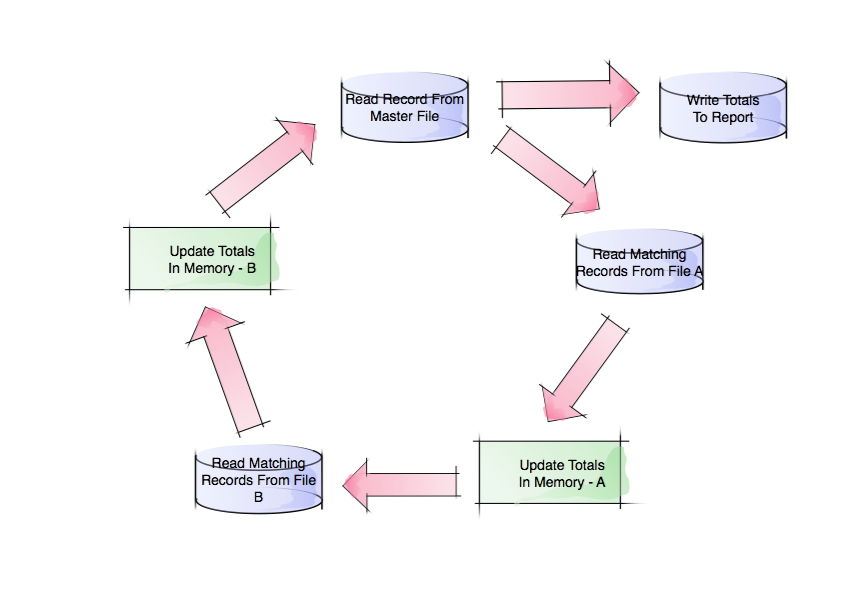

With most topologies there is a unique correlator passed for the life of a transaction through the CICS regions. This correlator (in mangled form) even shows up in DB2. So you can tie together transactions and regions: CICS PA can apparently do this and the next time I get some CICS data in I'm going to learn how to do this. In any case transaction names like "CSMI" (the CICS Mirror transaction) tend to suggest Multi-Region Operation (MRO).

Virtual Storage

I'm reminded of this because at one customer I was able to demonstrate that, while two applications each had Allocated virtual storage of 1500MB, the memory backed was half that in one and almost all of it in the other. You might deem the former region set moderately loaded and the latter heavily loaded.

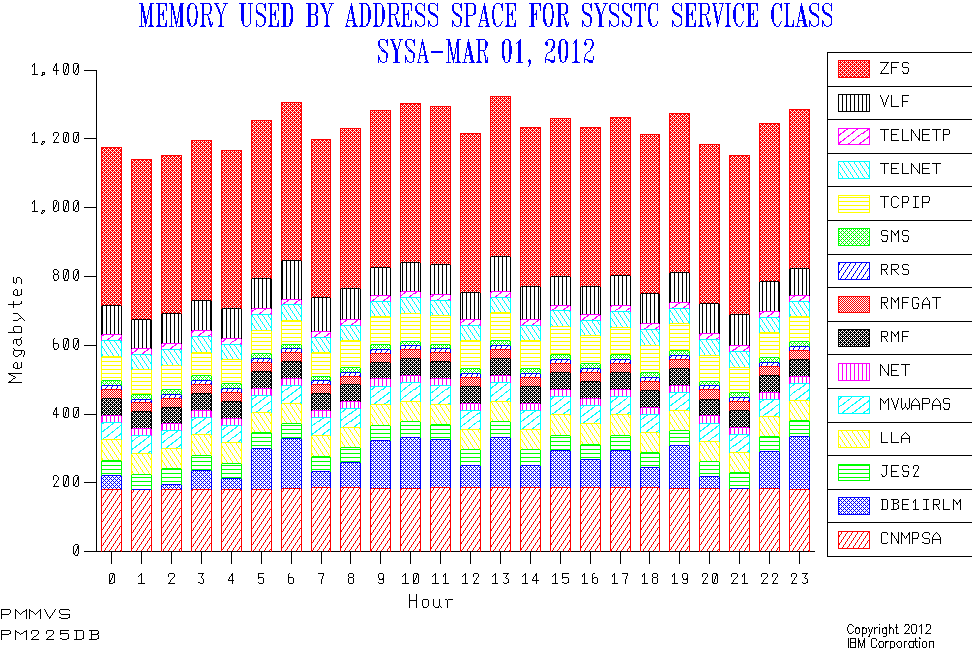

The virtual storage numbers – actually both 24-bit and 31-bit – come from Type 30 Interval records. The real storage numbers also come from the same records but with some "interpretational help"9 from RMF 72-3 records.
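The comparison above boils down to a ratio of backed to Allocated storage. As a trivial sketch: the 1500MB Allocated figure and the "half" come from the example above, but the 1450MB used for "almost all" is a number I've invented, as are the application labels and the function name.

```python
def backed_fraction(allocated_mb, backed_mb):
    """Fraction of Allocated virtual storage that is backed by real memory."""
    if allocated_mb <= 0:
        raise ValueError("allocated_mb must be positive")
    return backed_mb / allocated_mb


# Illustrative numbers: 1450 is an invented stand-in for "almost all".
applications = {
    "Application A": {"allocated_mb": 1500, "backed_mb": 750},
    "Application B": {"allocated_mb": 1500, "backed_mb": 1450},
}

fractions = {name: backed_fraction(**v) for name, v in applications.items()}
```

Plotting this fraction by interval, rather than taking a single value, shows whether a region set is drifting from "moderately" towards "heavily" loaded.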

But Allocated is a z/OS virtual storage concept: As with DB2 DBM1 address space virtual storage it is generally not the same as used. If it were it'd indicate a subsystem or region in trouble. So we need better information on which to make judgements. Fortunately we have it in the CICS 110 Statistics Trace records: You can do a good job of analysing and managing CICS virtual storage with this (just as you can with IFCID 225 data for DB2).

For one of these two applications virtual storage may well be the thing that determines when the regions need to be split.

Workload Balancing

You can see workload balancing in action at a number of levels:

- At the region level (given a naming convention that lets you identify clones, as above) you can see from Type 30 whether CPU numbers, EXCPs etc. are even across the clones. If they aren't, given supposed clones, you can conclude there isn't some kind of balancing or "round robin" in action – but some other kind of work distribution.

- From CICS SMF 110 (Monitor Trace) you can see transaction volumes and can aggregate by Transaction ID. So an imbalance could be explained – perhaps because the supposed clones run different transactions or some transaction is present in all but at different rates in each clone. Or some other explanation.

- Even without SMF 110 (which a lot of installations don't collect) DB2 Accounting SMF 101 could give you a similar picture (as might MQ's SMF 116).

So the "work distribution and balancing" theme can be addressed readily.
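One simple way to put a number on "even" is the coefficient of variation (standard deviation divided by mean) of a metric such as CPU seconds across the supposed clones. This is just one possible measure, not something the SMF records give you directly, and the sample CPU figures below are invented.

```python
from statistics import mean, pstdev


def coefficient_of_variation(values):
    """Population standard deviation divided by the mean (0.0 if mean is 0)."""
    avg = mean(values)
    return pstdev(values) / avg if avg else 0.0


# Invented CPU seconds per clone for one interval.
balanced = {"CICSAB00": 100.0, "CICSAB01": 102.0, "CICSAB02": 98.0, "CICSAB03": 100.0}
skewed = {"CICSXY1": 300.0, "CICSXY3": 60.0, "CICSXY5": 40.0}

cv_balanced = coefficient_of_variation(list(balanced.values()))
cv_skewed = coefficient_of_variation(list(skewed.values()))
```

A value near zero suggests something like round-robin distribution. A large value is the cue to go and aggregate by Transaction ID to find out why. Where you draw the line between the two is a judgement call.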

QR TCB vs Others

I mentioned above that virtual storage can sometimes drive the requirement to split CICS regions (whether cloned or not). The Quasi-Reentrant (QR) TCB can be another driver.10

Traditionally all work in a CICS region ran on the single QR TCB therein. And then File Control was offloaded from it. And the rest, as they say, is history.11

Then as now, if the QR TCB approaches 70% of a processor, performance can begin to degrade markedly. For this reason TCB times are documented in the SMF 110 CICS Statistics Trace record. I regularly see CICS regions using more than 70% of an engine (from SMF 30), but to judge whether that matters an installation needs to understand (using the 110) how much of it is really the QR TCB.

Without the 110's, again you could work with SMF 101 and 116 for DB2 and MQ, respectively. In fact I often do.
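The arithmetic behind the 70% rule of thumb is simple enough to sketch. Here the region's total CPU time is assumed to come from SMF 30 and the QR TCB CPU time from the 110 statistics, both already extracted for the same interval. The function names and the sample numbers are mine; only the 70% figure comes from the discussion above.

```python
QR_THRESHOLD_PERCENT = 70.0  # the rule-of-thumb figure quoted in the text


def percent_of_engine(cpu_seconds, interval_seconds):
    """CPU time expressed as a percentage of one processor for the interval."""
    return 100.0 * cpu_seconds / interval_seconds


def qr_tcb_check(region_cpu_seconds, qr_cpu_seconds, interval_seconds):
    """Compare whole-region CPU with the QR TCB's share of an engine."""
    qr_pct = percent_of_engine(qr_cpu_seconds, interval_seconds)
    return {
        "region_percent": percent_of_engine(region_cpu_seconds, interval_seconds),
        "qr_percent": qr_pct,
        "qr_near_limit": qr_pct >= QR_THRESHOLD_PERCENT,
    }


# Two invented 15-minute (900 second) intervals with the same total region CPU.
busy_but_fine = qr_tcb_check(720.0, 450.0, 900.0)
qr_bound = qr_tcb_check(720.0, 675.0, 900.0)
```

Both sample regions use 80% of an engine overall, but only the second has a QR TCB above the threshold, which is why the SMF 30 number alone isn't enough.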

So, I've tried to give you a flavour of what you can learn about a CICS installation from SMF, i.e. without going near the actual regions themselves. This is indeed just a flavour.

On the "inconsistency" point, consistency isn't vital but good naming conventions have real value. It's an old joke that goes "we like naming conventions so much we have lots of them, some of which contradict each other". 🙂

There are plenty of other examples where there are inconsistencies. A good one is LPAR / z/OS system names. I've seen several customers with the following kind of scenario: "Our systems are called things like A158, SYSC, DSYS, Z001 and MVS1." And it's not just LPAR names and CICS region names, of course.

The inconsistencies in installations often reflect history. And a notable category is Mergers and Acquisitions. (The LPAR names example above is often caused by this.) I'm really impressed at what customers manage to achieve when they do something like this: Getting it to work reliably is the most important thing. Homogenisation of names should be and is secondary.

I really like to see traces of the history in the systems I examine. Some of you reading this have been with me on the journey of your systems' lifetimes for a long time now: I wonder how much history we each remember. 🙂 Next time you see me ask me to pull out some slides from previous engagements: When I do this people are astonished by how much hasn't changed and how much has.

As you possibly spotted that was "Strike Three" for consistency in this post so I guess I'm out. 🙂 This was indeed going to be a post about consistency but took a different direction, as I said. I hope you found the "CICS nosiness" aspect interesting and useful. If you do I might well turn it into a set of slides and add some more material. If you have anything to add I'd be interested in hearing about it – whether you're from Hursley12 or not.

Footnotes

1 The reference here is, of course, 🙂 to Ralph Waldo Emerson's essay "Self-Reliance" where he wrote "a foolish consistency is the hobgoblin of little minds".

2 Such as "kicks", "chicks", "thicks", "six" and "sex" (no, really). 🙂 And my least preferred one is "see eye see ess".

3 If you think I'm self-consciously channelling Einstein here you'd be wrong: It's actually Mao. 🙂 Because the thought experiment is no substitute for experience – according to "On Practice".

4 Actually I doubt the utility of SMF 30 Interval records for batch jobs.

5 I believe you can get data from IMS relatable to CICS transactions – but I know relatively little about IMS.

6 And you can tell a CICS-related 101 record because the value of the QWHCATYP (Connection Type) is QWHCCICS. Further, you can tell things about sign ons from the QWACRINV field value.

7 You might not know this but the SMF 72-3 record has the Service Class Description character string – from the WLM policy. I'm slowly evolving my charting to use the description. Time to clean it up, folks. 🙂

8 While you get member name in 74-2 (and I'm proud to say I got job name in as a more useful counterpart) you don't get "point to point" information: You just get the messages sent from and to the XCF member. Figuring the actual topology out by matching message rates is fraught. I'd love an algorithm that was effective (or efficient) at this.

9 What I mean by this will have to await another post – some time.

10 26 years ago I worked on CICS Virtual Storage at a Banking customer. Not a lot has changed. 🙂 20 years ago I was involved in enabling customers to take advantage of multiple processors by splitting regions as described in this section. Again, not a lot has changed. 🙂 But this is unfair because the Virtual Storage and CPU pictures have changed a lot.

11 Or is it hysteria? 🙂

12 Home of CICS and WebSphere MQ Development