(Originally posted 2016-08-11.)

(Reposted without change as I accidentally deleted it while getting rid of a SPAM comment.)

Episode 5 had a different feel for me. It was our first “trip report” episode, and it felt much looser for that.

In fact the sound effects between topics could’ve been elided but for now I’m sticking slavishly to the format. It didn’t feel too artificial to me.

I’m conscious that most of my readership and our listenership (and the stats prove you exist, as I said in the show) weren’t in Munich.

I think, though, there are things that non-attendees will find valuable or at least enjoy.

People probably think I like the sound of my own voice; the reality is I’m coming to like it. 🙂 Nobody likes how they sound recorded. But the conventional wisdom – that you get used to it – seems to be true.

Thanks to our friend Margaret Moore Koppes for “playing Paparazzi”. 🙂

And the audio production gimmick is subtle this time. 🙂

Below are the show notes.

The series is here.

Episode 5 is here.

Episode 5 “The Long Road Back From Munich” Show Notes

Here are the show notes for Episode 5 “The Long Road Back From Munich”. Here is the link back to all episodes: Mainframe, Performance, Topics episodes.

The show is called “The Long Road Back From Munich” because we’ve both returned from a successful z Systems conference: 2016 IBM z Systems Technical University, 13 – 17 June, Munich, Germany. For one of us the journey back was much longer than for the other.

Mainframe

Our “Mainframe” topic was Marna’s z/OS observations from the conference:

*IBM HTTP Server Powered by Apache*: it seemed about 30–40% were impacted by the move from the Domino to the Apache server. More than hoped, but if you work on it while on z/OS R13 or V2.1, you’ll be well-positioned for z/OS V2.2.

*zEvent sessions*: Martin and Marna both went to Harald Bender’s zEvent session where he discussed using your mobile device (either Apple or Android) to receive timely information about events on your z/OS system. The handouts are here: zEvent and z/OS Console Messages to Your Mobile Device. This app was so easy to download and start using that Martin did just that during Harald’s session!

*z/OSMF*: Marna was happy with the interest in z/OSMF, and with the z/OSMF V2.2 enhancements rolled back into z/OSMF V2.1 in PTFs from January 2016 (PTF UI90034). There is no reason to delay using it. The z/OSMF lab for SDSF, however, had a problem as CEA had somehow lost its TRUSTED attribute before Munich. After it was made TRUSTED again (after the conference), everything was fine. Goes to show how important the security settings are for z/OSMF!

*z/OS V2.2*: Good interest in the release. Happy to see so many people already running z/OS V2.

*Secure electronic delivery*: Since regular FTP for electronic delivery was removed on March 22, 2016, only secure delivery is available. No one at the conference said they were impacted, which was nice to see.

Performance

Our “Performance” topic was Martin’s performance observations from the conference:

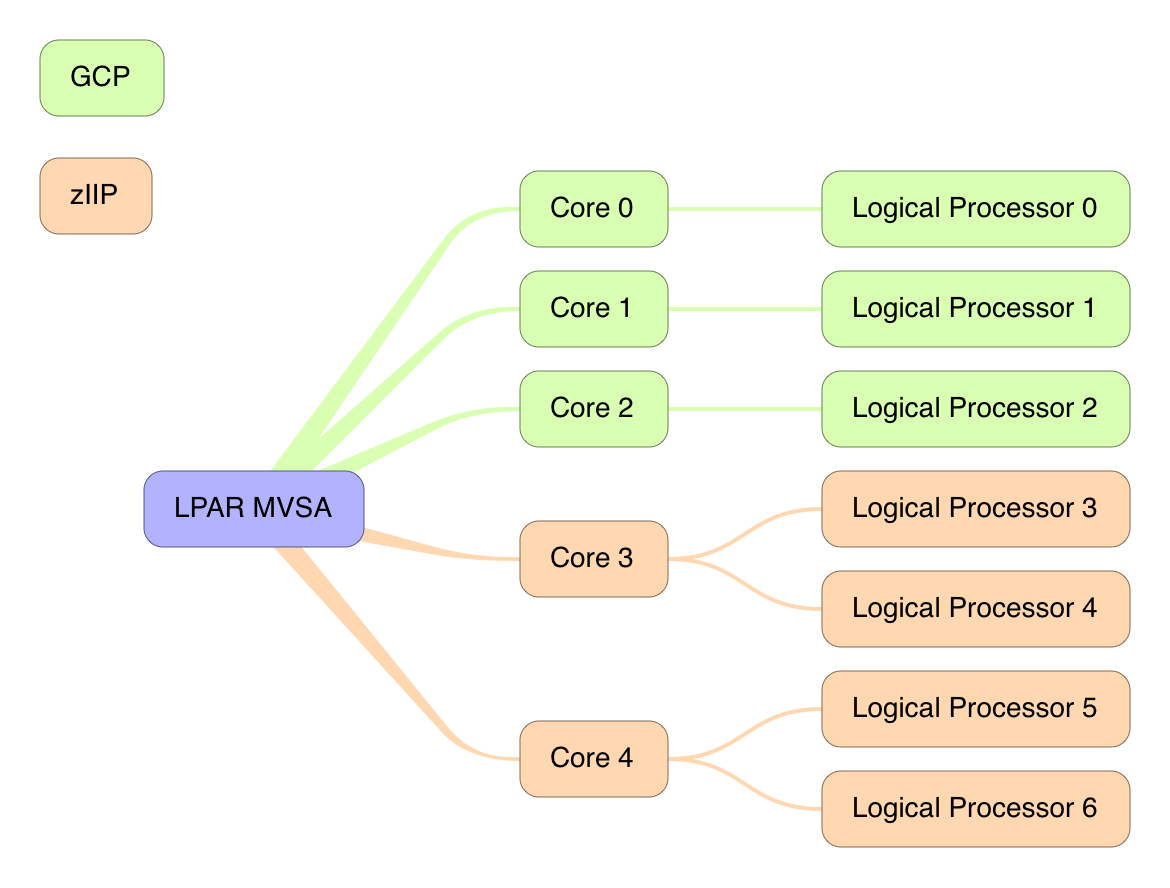

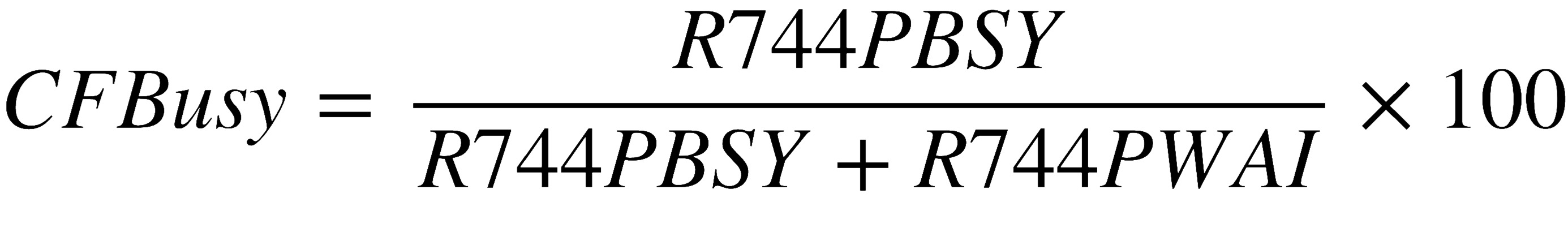

*State Of SMT Instrumentation Knowledge*: Simultaneous Multithreading (SMT) metrics are not well understood at this point. Customer data from turning on SMT (for both zIIP and IFL) is starting to appear on Martin’s desk. The good news is that the pickup on this function is fast.

*His Presentations*: Martin’s sessions were nicely attended. Martin is continuing to have fun looking at DDF, and “He Picks on CICS” might have more information added in the future.

The presentations can be found on Slideshare:

Topics

In our “Topics” section we discussed various other conference observations:

*Martin presented sessions from his iPad*: Although a lot of cables had to be carried around, it did work fine. He even used his Apple Pencil to mark on the slides during his presentations. So he might never lug a laptop to a conference again. Famous last words!

*Conference poster sessions*: What a success! Martin & Marna had a poster about…wait for it…this podcast. Martin was very busy talking to people who were interested in our poster. Marna also had a poster on using MyNotifications for New Function APAR notification: New Function APAR Notifications.

We tried out a QR code for our podcast, and it worked for almost everyone.

Paparazzi were there to take photos of some famous folk that stopped by the poster sessions:

Where We’ll Be

Martin is taking a well-deserved vacation for July, so there’ll be no new podcast episodes in July. But we promise to return early in the Autumn!

Marna is going to SHARE in Atlanta (August 1–5) and the IBM Systems Symposium in Sydney, Australia (August 16–17).

On The Blog

Martin posted to his blog, since our last episode:

Contacting Us

You can reach Marna on Twitter as mwalle and by email.

You can reach Martin on Twitter as martinpacker and by email.

Or you can leave a comment below.