(Originally posted 2017-12-03.)

In my “Parallel Sysplex Performance Topics” presentation I have some slides on Coupling Facility Processor Busy. I’ve worried about including them, considering them borderline boring.[1]

In my head I justified them because they:

- Help people understand Structure Execution Time (R744SETM).

- Help people see the changed behaviour with Thin Interrupts.

For both of those topics it’s been enough to take a “whole Coupling Facility” view, aggregating over all the Coupling Facility’s processors.

But, and not many people know this, RMF documents the picture for individual processors in SMF 74 Subtype 4. This isn’t widely known, mainly because the RMF reports don’t show this level of detail.

One can speculate why this level of detail exists. My take is that it was relevant long ago when we had “Dynamic ICF Expansion”.[2] This feature allowed an ICF LPAR to expand beyond the ICF pool into the GCP pool. The document “Performance Impacts of Using Shared ICF CPs” describes this feature. (It is from 2006 but it does describe this one feature quite well.)

There, you’d want a better picture of Coupling Facility processor busy than just summing it all up. In particular you’d want to know if the GCP engines had been used.

What Is Coupling Facility Processor Busy?

This seems like a silly question to ask, but it isn’t.

If you were to look at RMF’s Partition Data Report for the ICF pool you’d find dedicated ICF LPARs always 100% busy. And it’s not just because they’re dedicated. It’s because the Coupling Facility spins looking for work. So that’s not a useful measure – for dedicated ICF LPARs.[3]

So a better definition is required, and thankfully RMF provides one. There are two relevant SMF 74 Subtype 4 fields:

- R744PBSY – when the CF is actually processing requests.

- R744PWAI – when the CF is not processing requests but the CFCC is still executing instructions.

Using these two the definition of busy isn’t hard to fathom:

Coupling Facility Busy % = 100 * R744PBSY / (R744PBSY + R744PWAI) [4]

This, as I say, is normally calculated across all processors in the Coupling Facility.
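As a minimal sketch of that calculation, here is the formula in Python. The function name and the sample values are my own illustration; only the field names R744PBSY and R744PWAI come from RMF, and reading them out of SMF 74 Subtype 4 records is not shown.

```python
def cf_busy_pct(pbsy, pwai):
    """Coupling Facility Busy % from busy and wait time in the same unit."""
    total = pbsy + pwai
    if total == 0:
        # Assumption: treat an interval with no time recorded as 0% busy.
        return 0.0
    return 100.0 * pbsy / total


# Hypothetical (R744PBSY, R744PWAI) pairs in microseconds, one per processor.
processors = [(90_000_000, 810_000_000), (30_000_000, 870_000_000)]

# Whole-CF view: sum busy and wait over all processors, then divide once.
total_busy = sum(busy for busy, _ in processors)
total_wait = sum(wait for _, wait in processors)
whole_cf = cf_busy_pct(total_busy, total_wait)

print(f"Whole CF busy: {whole_cf:.1f}%")
```

Summing before dividing weights each processor by the time it actually reported, which is what you want if the processors don’t all report the same elapsed time.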

By the way, you might find “Coupling Facility Structure CPU Time – Initial Investigations” an interesting read. It’s only 9 years old. 🙂

Processor Busy Considerations

But let’s come almost up to date.

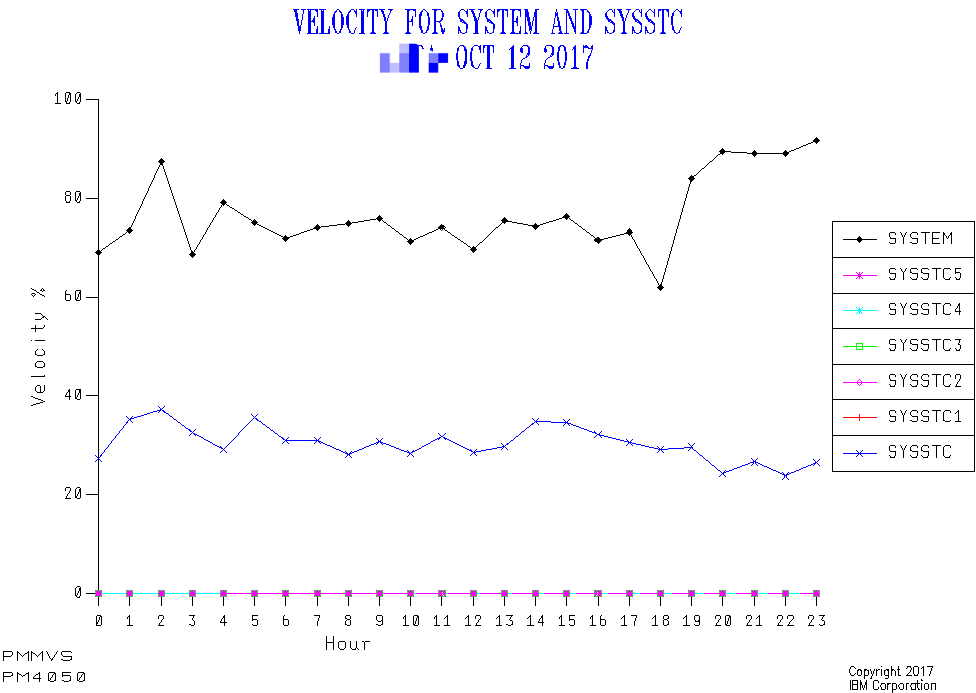

I recently looked at a customer’s parallel sysplex and got curious about engine-level Coupling Facility Busy, so I prototyped some code to calculate it at the engine level. I not only summarised across all the RMF intervals but plotted by the individual 15-minute interval.

Here is the summarised view for one of their two 10-way z13 Coupling Facilities:

The y axis is Coupling Facility Busy for the engine, the x axis being the engine number.

So clearly there is some skew here, which I honestly didn’t expect. By the way, at the individual interval level the skew stays about the same. Indeed the same processors dominate, to the same degree.
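To make the two views concrete, here is a rough sketch of how engine-level busy might be derived once the per-processor values are out of SMF. This is not my actual prototype. The row layout, the function names and the numbers are all assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical rows: (interval_start, engine_number, R744PBSY, R744PWAI)
rows = [
    ("09:00", 0, 120, 780),
    ("09:00", 1, 30, 870),
    ("09:15", 0, 150, 750),
    ("09:15", 1, 45, 855),
]


def busy_pct(busy, wait):
    total = busy + wait
    return 100.0 * busy / total if total else 0.0


def busy_by_interval(rows):
    """Busy % for each (interval, engine) pair, for plotting over time."""
    return {(interval, engine): busy_pct(b, w) for interval, engine, b, w in rows}


def busy_summarised(rows):
    """Busy % per engine across all intervals.

    Busy and wait are summed per engine first, rather than averaging the
    per-interval percentages, so unequal intervals are weighted correctly.
    """
    sums = defaultdict(lambda: [0, 0])
    for _, engine, b, w in rows:
        sums[engine][0] += b
        sums[engine][1] += w
    return {engine: busy_pct(b, w) for engine, (b, w) in sorted(sums.items())}


for engine, pct in busy_summarised(rows).items():
    print(f"Engine {engine}: {pct:.1f}% busy")
```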

A couple of points:

- At this low utilisation level the skew doesn’t really matter, as no engine is particularly busy. However, we like to keep the Coupling Facility as a whole below 50% busy. Part of this is about “white space”[5] but it’s also about everyday performance. I have to say I’ve not seen a case where Coupling Facility busy caused requests to get elongated, but that means nothing. 🙂 So, I’d like to suggest that individual engine busy needs measuring, to ensure it doesn’t exceed 50%. This is a revision of the “whole CF” guideline. But at least the data’s there.

- This is a 10-way Coupling Facility. It would be better, where possible, to corral the work into fewer engines, perhaps fitting within a single processor chip. In this customer’s case there’s a spike which means this isn’t possible. Working on the spike’s the thing.
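Applying the 50% guideline per engine could look something like the sketch below. The function name, the default threshold parameter and the sample figures are hypothetical, not taken from the customer’s data.

```python
def engines_over_threshold(engine_busy, threshold_pct=50.0):
    """Return engine numbers whose busy % strictly exceeds the threshold."""
    return sorted(e for e, pct in engine_busy.items() if pct > threshold_pct)


# Hypothetical engine number -> busy % for one interval.
engine_busy = {0: 12.5, 1: 61.0, 2: 8.0, 3: 50.0}

flagged = engines_over_threshold(engine_busy)
print("Engines above 50% busy:", flagged)
```

Running this per interval rather than on the summarised figures is probably the safer choice, as a short spike can hide inside a day-long average.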

CFLEVEL 22

Now let’s come really up to date.

z14 introduced CFLEVEL 22. One area of change is in the way work is managed by the Coupling Facility Control Code (CFCC). In particular, processors have become more specialised. This is to improve efficiency with larger numbers of processors in a Coupling Facility.

CFLEVEL 22 introduced “Functionally specialized” ICF processors for CF images with dedicated processors defined under certain conditions:

- One processor for inspecting suspended commands

- One processor for pulling in new commands

- The remaining processors are non-specialized for general CF request processing.

This avoids lots of inter-processor contention previously associated with CF engine dispatching.

If there are going to be specialised engines I’d expect more skew than before. At this stage I’ve no idea whether the two specialised processors are going to be busier than the rest or less busy.[6] Further, I don’t know how you would manage down the CPU for either the specialised processors or the rest. Maybe the state of the art will evolve in this area.

Note: There’s no way of detecting a processor as belonging to one of these three categories.

So, this makes it even more interesting to examine Coupling Facility Busy at the individual engine level. I’ve not yet seen CFLEVEL 22 RMF data, but at least I have a prototype to work from, whether the customer’s data is at CFLEVEL 22 or not.
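If you wanted a single number to compare skew before and after a move to CFLEVEL 22, one possibility is the busiest engine’s busy divided by the mean across engines. This ratio is purely my choice for illustration. It isn’t an RMF metric, and the function name and sample values are hypothetical.

```python
def skew_ratio(engine_busy):
    """Busiest engine's busy % divided by the mean busy % across engines.

    1.0 means perfectly even; larger values mean more skew.
    Returns 0.0 when there is no data or every engine is idle (an assumption).
    """
    values = list(engine_busy.values())
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return max(values) / mean if mean else 0.0


# Hypothetical engine number -> busy %.
sample = {0: 30.0, 1: 10.0, 2: 20.0}
print(f"Skew ratio: {skew_ratio(sample):.2f}")
```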

Stay tuned.

[1] And if I think that, goodness knows what the audience thinks. 😦

[2] z10 was the last range of processors to have this.

[4] These fields are in microseconds, though it doesn’t matter for the purposes of this calculation.

[5] So we can recover structures from failing Coupling Facilities.

[6] And I’ve no idea which of the two would be the busier one.