(Originally posted 2016-05-07.)

You probably wonder why I post to my blog echoing our podcast episodes. There are two reasons:

- Yes, it alerts more people to our podcast series. Well duh. 🙂

- It gives me a chance to inject something more personal about the episode.

So in the latter spirit I’d say the highlight for me of making Episode 3 was getting the “three part disharmony” 🙂 working.

Glenn Wilcock very kindly agreed to be our first guest and having a guest raised an interesting problem:

How do you edit with three people? We could’ve gone all mono on you, but I’m not keen on that. Indeed I would encourage listeners to use headphones if at all possible – as I’m playing games with the “stereoscape”. As a Queen fan I’ve been spoilt. 🙂

When I edit I place myself on the left (naturally) 🙂 and (unfortunately for her) I consign Marna to the right. 🙂 So, obviously, a guest has to be placed in the middle.

Now, when it’s just the two of us I use a piece of software on my Mac to record the Skype call. It captures the video but Audacity (our audio editor of choice) will extract the stereo audio from that. I appear on the left and Marna on the right.

The trick with more than two people is to ask everyone to record the Skype call and send me their files. Then I can use Audacity to throw all the “right channel” stuff away. And then I’m in business.
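The idea can be sketched in a few lines of Python. This is only an illustration, not how the episode was made (that was done by hand in Audacity). It assumes each participant's recording has their own voice on the left channel, that the three recordings are already time-aligned, and that samples are floats between -1.0 and 1.0. The function names and the simple linear panning are made up for the example.

```python
# Illustrative sketch only: the real edit was done by hand in Audacity.
# A stereo recording is modelled as a list of (left, right) float samples.

def keep_left(stereo):
    """Throw the right channel away, leaving the local speaker in mono."""
    return [left for left, _right in stereo]

def pan(mono, position):
    """Place a mono track in the stereo field.

    position: -1.0 is hard left, 0.0 is the middle, 1.0 is hard right.
    Linear panning is an assumption; a real editor's pan law may differ.
    """
    left_gain = (1.0 - position) / 2.0
    right_gain = (1.0 + position) / 2.0
    return [(s * left_gain, s * right_gain) for s in mono]

def mix(*tracks):
    """Sum stereo tracks sample by sample, padding short ones with silence."""
    length = max(len(t) for t in tracks)
    mixed = []
    for i in range(length):
        left = sum(t[i][0] for t in tracks if i < len(t))
        right = sum(t[i][1] for t in tracks if i < len(t))
        mixed.append((left, right))
    return mixed

def three_way_mix(martin_rec, marna_rec, guest_rec):
    """Martin on the left, the guest in the middle, Marna on the right."""
    return mix(
        pan(keep_left(martin_rec), -1.0),
        pan(keep_left(guest_rec), 0.0),
        pan(keep_left(marna_rec), 1.0),
    )
```

Calling `three_way_mix` with the three recordings returns one stereo track with each voice in its own position. In practice the fiddly part is lining the recordings up in time, which this sketch does not attempt.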

I think you’ll agree this worked really well in this episode.

But if a participant can’t record then we have to fall back on “punching out” each contribution to make a fresh mono recording for them. That’s cumbersome and requires clear separation between the contributions.

But Glenn was able to furnish his own recording so all was good.

Arrogantly enough 🙂 I’ve offered my support to others in IBM in getting going with podcasting. “Learn from what I’ve learnt, even though I’m only just ahead of you” has long been my modus operandi.

Below are the show notes.

The series is here.

Episode 3 is here.

Episode 3 “Getting Better” Show Notes

Here are the show notes for Episode 3 “Getting Better”.

Follow Up

We had some follow up items:

- Following up the Episode 1 “Topics” item on Markdown, Martin talked about John Gruber’s Daring Fireball Dingus page which lets you paste in Markdown and see the HTML generated from it (and how the HTML is rendered).

- Also following up on an Episode 1 item, but this time the “Mainframe” item, Marna returned to the ISPF 3.17 Mount Table right / left problem she had been seeing. The cause was the PF key definition (which she suspected all along), and defining 12 PF keys did the trick.

Mainframe

Our “Mainframe” topic included our first guest, Glenn Wilcock, a DFSMS architect specializing in HSM. Glenn talked about a very important function – using zEnterprise Data Compression (zEDC) for HSM. He discussed some staggeringly good numbers for CPU reduction, throughput improvement, and storage usage reduction. A win on all three fronts. Here are some of the links that you can use to get more information about this topic:

Performance

Our “Performance” item was a discussion on one of Martin’s “2016 Conference Season” presentations: “How To Be A Better Performance Specialist”. Two particular points arising:

- This presentation might interest a wider audience than just Performance and Capacity people.

- You might get something out of it even if you’ve been around for a while.

We’ll publish a link to the slides when they hit Slideshare, probably after the 2016 IBM z Systems Technical University, 13 – 17 June, Munich, Germany.

Topics

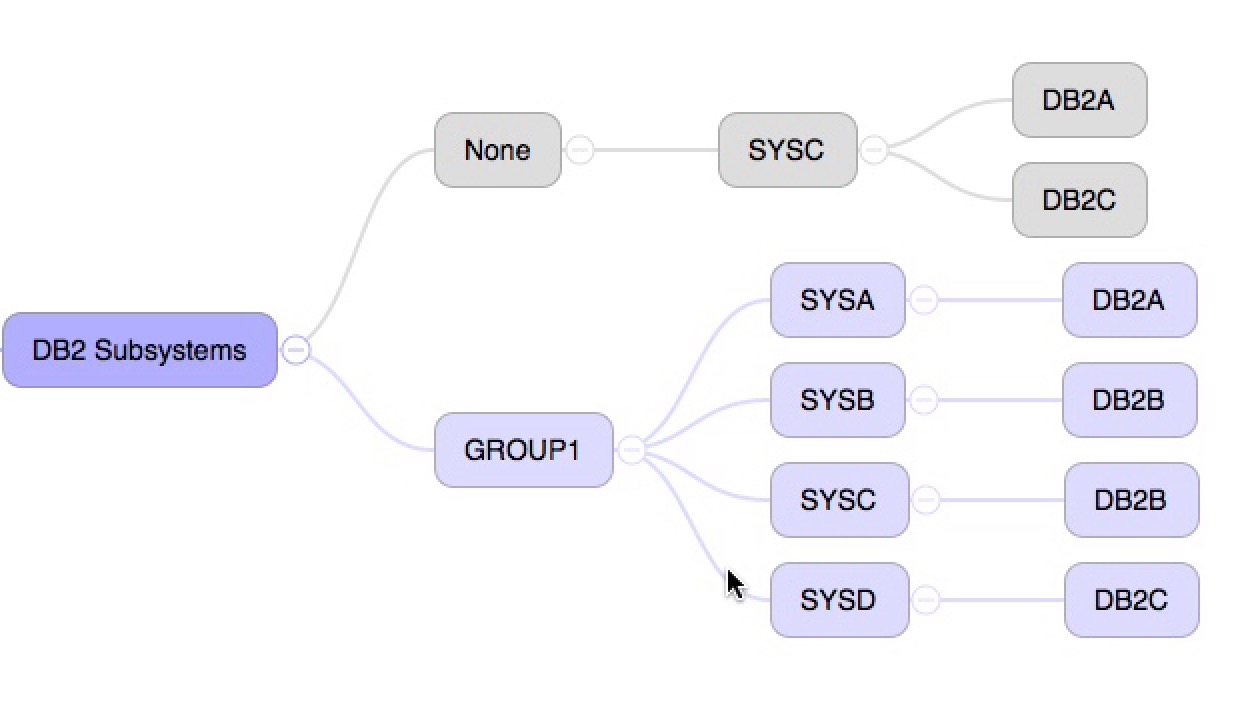

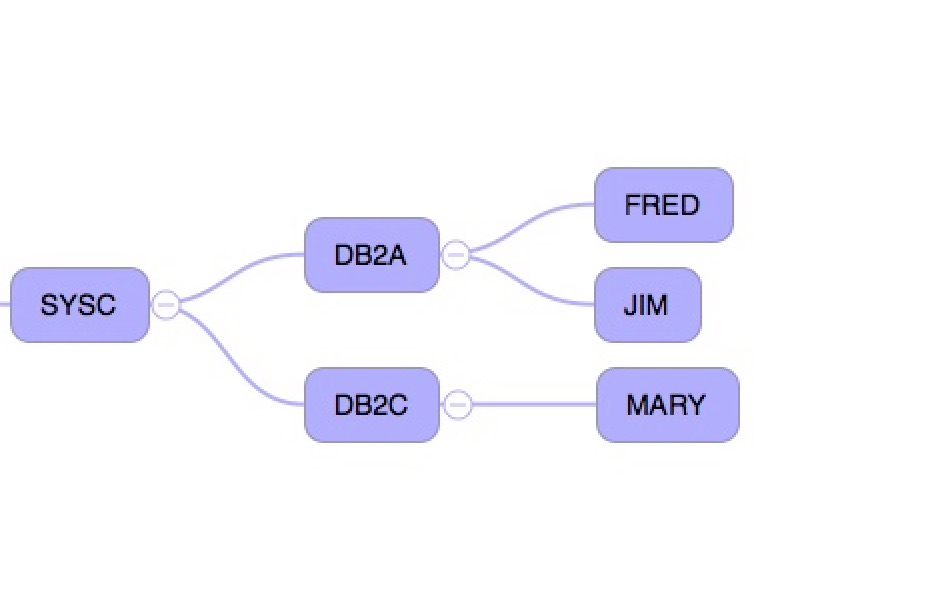

Under “Topics” we discussed mind mapping and how we use it for this podcast and other uses, such as depicting relationships between CICS systems and the DB2 subsystems they attach to.

Martin mentioned Freemind, an open source cross-platform mindmapping tool, available from here.

He also mentioned the proprietary iThoughts, which has an iOS version and a macOS version. Data is interchangeable between the two, and Martin uses both, with the iOS version on his iPad Pro and his iPhone.

On The Blog

Martin posted to his blog:

Marna posted to her blog:

- Are you electronic delivery secure?

Warning!!! Regular old FTP for electronic software delivery will be gone on May 22, 2016, for Shopz and SMP/E RECEIVE ORDER. Find a secure replacement.

Contacting Us

You can reach Marna on Twitter as mwalle and by email.

You can reach Martin on Twitter as martinpacker and by email.

Or you can leave a comment below.