(Originally posted 2015-05-04.)

This post is one where I really don’t speak for IBM.

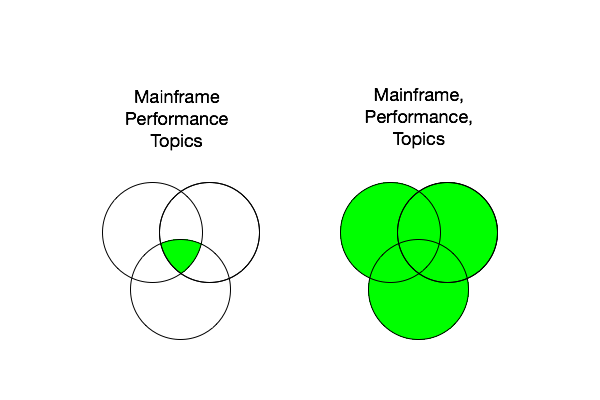

It’s also one that’s firmly in the “Topics” category of “Mainframe, Performance, Topics”, being about the emerging fields of iOS Automation (and Web Automation).

And, while I give Remember The Milk a “could try harder” score, I’m actually a big fan of what they do.

As with many things I’m a big fan of, I think of ways they could do stuff better, and try to make sure such dreams have a practical utility.

Remember The Milk

In brief, Remember The Milk is a platform-independent cloud service for managing To Do lists (and lists in general).

It’s not the only one, but it’s the one I use on Linux, OS X and iOS.

It has an iOS app and a web interface; I use both extensively.

You can email tasks (and lists of tasks) into it.

You can even set Siri up to add a task to Remember The Milk, instead of to Reminders.

Each task can have subtasks, start and due dates, estimates and notes attached to it.

At present I mostly just use the due dates, though I have a few notes.

Some tasks are recurring, and when I’ve done one I manually move its due date on, e.g. by a week.

I also move tasks around anyway, again manually.

I also complete tasks – occasionally. 🙂

As it’s a cloud service I can’t put anything IBM Confidential in it, and I won’t put anything sensitive of my own in it.

iOS Automation

Until relatively recently there wasn’t much you could do to automate tasks on iOS, still less to glue them together.

Now why might you want to do that?

Mostly to enable function that would otherwise be fiddly, overly manual or slow.

I listen avidly to three podcasts that cover iOS and OS X topics, and the first two of these have recently (independently) done episodes on iOS Automation:

Mostly I listen to these while running, though occasionally in the car or on a plane.

Usually somewhere where noting down the inspirations I get from them is difficult. 🙂

(I’ve yet to dictate a To Do via Siri while running.) 🙂

My first brush with iOS Automation was with Editorial, as discussed in Appening 3 – Editorial on iOS.

Then along came Workflow on iOS, which has some amazing choreographic capabilities, with more apps it can manipulate being added all the time.

In a similar vein is Schemes.

And then Drafts, which has JavaScript (comparable to Editorial’s Python), hove into view.

(It’s been around for a while but I’ve only just got into it.)

Meanwhile iOS 8 brought lots of new function, including the ability to build extensions for apps and the ability for third-party apps to add function to the Today screen.

(Workflow allows you to build app extensions, for one.)

The Today screen piece is interesting because Drafts, as well as launcher apps such as Launcher, can launch actions straight from there.

Launcher apps let you launch apps at the push of a button, in many cases with specific parameters.

Greg Pierce of Drafts fame introduced the x-callback-url specification, which launchers tend to use.

But it’s not just launchers: all the automation vehicles I’ve already mentioned use it too.
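For anyone who hasn’t met x-callback-url: a URL has the shape `scheme://x-callback-url/action?parameters`, where the optional `x-source`, `x-success`, `x-error` and `x-cancel` parameters tell the target app who called it and where to go back to afterwards. Here is a minimal Python sketch of assembling one. The `rtm` scheme and `open` action in the usage below are purely hypothetical, since Remember The Milk supports neither.

```python
from urllib.parse import quote, urlencode


def x_callback_url(scheme, action, params=None, source=None,
                   success=None, error=None, cancel=None):
    """Build a URL of the form scheme://x-callback-url/action?query."""
    query = {}
    # Optional x-callback parameters: the calling app's name and the URLs
    # the target app should open on success, error or cancellation.
    for key, value in (("x-source", source), ("x-success", success),
                       ("x-error", error), ("x-cancel", cancel)):
        if value is not None:
            query[key] = value
    # Action-specific parameters are appended after the x-callback ones.
    query.update(params or {})
    url = f"{scheme}://x-callback-url/{action}"
    if query:
        # quote_via=quote encodes spaces as %20 rather than '+', and
        # safe="" percent-encodes ':' and '/' inside nested callback URLs.
        url += "?" + urlencode(query, quote_via=quote, safe="")
    return url


# Hypothetical: what "open the Shopping List list" might look like.
print(x_callback_url("rtm", "open", {"list": "Shopping List"},
                     source="Launcher", success="launcher://"))
```

Percent-encoding the nested `x-success` URL matters: without it the target app couldn’t tell where the callback URL ends and its own parameters begin.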

Meanwhile, (largely) outside of iOS, IFTTT has web-based automation.

I’ve a few workflows which use IFTTT, but mostly I’m interested in iOS automation as it doesn’t require the web, nor does it suffer from the latency that comes with it.

Automatic For The People?

But not Remember The Milk.

All I can do is email tasks to Remember The Milk (or send them by SMS).

I can’t query it.

And I can’t open its app with any control.

So I have used the email route:

- With Launcher and Workflow I can take the clipboard contents and email them as a task – from the Today screen.

(I use this for quick thoughts and they end up in my Inbox for later refinement and classification.)

- With Drafts I have some JavaScript code that takes a template note, replaces ‘%c’ with a user-provided customer name (generally “Client X”) and emails the result to Remember The Milk.

(I’ve yet to integrate this into Drafts on the Today screen.)
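My Drafts version is JavaScript, but the idea is simple enough to sketch in Python: substitute the customer name for `%c` and wrap the result in an email addressed to Remember The Milk. This is a sketch under assumptions: the inbox address is a placeholder (each account has its own), the template is made up, and splitting the first line off as the subject is my choice for illustration. It only builds the message; actually sending it (for example with `smtplib`) is left out.

```python
from email.message import EmailMessage

# Placeholder: Remember The Milk gives each account its own inbox address.
RTM_INBOX = "your-inbox-address@example.com"


def fill_template(template: str, customer: str) -> str:
    """Replace every %c placeholder with the customer name."""
    return template.replace("%c", customer)


def build_task_email(template: str, customer: str,
                     to_addr: str = RTM_INBOX) -> EmailMessage:
    """Fill the template and wrap it in an email.

    Assumption for this sketch: the first line of the filled template
    becomes the subject and the remainder becomes the body.
    """
    text = fill_template(template, customer)
    subject, _, body = text.partition("\n")
    msg = EmailMessage()
    msg["To"] = to_addr
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


# Hypothetical template with two placeholders.
msg = build_task_email("Prepare study for %c\nCollect data from %c", "Client X")
print(msg["Subject"])
```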

These work fine but are limited.

Here are some examples of what I can’t do:

- I’d like, with a single tap, to open the Remember The Milk app at the “Shopping List” list. Or “Today” or “Cust Sitns” or whatever.

Launcher could do that if RTM supported x-callback-url.

- I’d like to query a list, take the first item that hasn’t been completed, and kick off some automated action based on it.

For example, “kick off study” might include automation to create the various topic presentations in Dropbox.

(Such as “CPU”, “Memory” etc.)

- I’d like to automatically mark a task as completed.

- I’d like to construct a web of dependencies for a project.

But none of these things are doable with Remember The Milk right now.

All for the want of some automation capabilities, most notably x-callback-url.
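To make the “kick off study” idea a little more concrete: once a task could be read out of Remember The Milk, the action side might be as simple as creating a placeholder per topic in Dropbox. A Python sketch, where the folder layout, file names and topic list are all hypothetical:

```python
from pathlib import Path

# Hypothetical topic list; "CPU" and "Memory" are the examples given above.
TOPICS = ["CPU", "Memory"]


def kick_off_study(base: Path, customer: str, topics=TOPICS) -> list:
    """Create one placeholder file per topic under base/customer.

    Returns the paths actually created. Existing files are left alone,
    so running it twice for the same customer is harmless.
    """
    study_dir = base / customer
    study_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for topic in topics:
        path = study_dir / f"{topic}.txt"
        if not path.exists():
            path.write_text(f"{topic} for {customer}\n")
            created.append(path)
    return created


# Hypothetical Dropbox location:
# kick_off_study(Path.home() / "Dropbox" / "Studies", "Client X")
```

Making it idempotent seems the right design choice for something triggered automatically: if the trigger fires twice, nothing gets overwritten.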

The TaskPaper Alternative

The Nerds On Draft podcast and the TaskPaper support in Editorial persuaded me that TaskPaper might be in my future.

If you don’t know what TaskPaper is, see Deconstructing my OmniFocus Dependency and The TaskPaper R&D Notebook.

TaskPaper is a text-based task list format, which can readily be automated and synced across many devices via Dropbox.
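As an illustration of “readily automated”, here is a Python sketch of the query I can’t do with Remember The Milk: find the first task in a list that hasn’t been completed. It assumes a simplified view of the format: a project is a line ending in a colon, a task is a line starting with “- ”, completed tasks carry an `@done` tag (optionally with a date), and anything else is a note. Nested projects and tags on project lines aren’t handled.

```python
import re

# An "@done" tag, optionally with a value such as @done(2015-05-04).
DONE_TAG = re.compile(r"(^|\s)@done(\(.*?\))?(\s|$)")


def first_open_task(text: str, project: str):
    """Return the first task under `project` not tagged @done, else None."""
    in_project = False
    for raw in text.splitlines():
        line = raw.strip()
        if line.endswith(":") and not line.startswith("- "):
            # A project header: are we entering the one we want?
            in_project = line[:-1] == project
        elif in_project and line.startswith("- "):
            if not DONE_TAG.search(line):
                return line[2:].strip()
    return None


doc = """Cust Sitns:
\t- Send report to Client X @done(2015-05-01)
\t- Kick off study for Client Y
Shopping List:
\t- Milk
"""
print(first_open_task(doc, "Cust Sitns"))
```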

Intriguingly, the Sublime Text editor, which I use on Linux and OS X, has a plugin, PlainTasks, which allows you to manipulate TaskPaper files.

(Sublime Text also allows you to write plugins in Python. What’s not to like? 🙂)

But doesn’t this sound a little geeky? 🙂

I’m all for formats that can be read and updated by many standard tools.

But I really don’t want to have to assemble too much of the basics myself.

My Challenge To Remember The Milk

So, my challenge (for what it’s worth) to Remember The Milk is: Embrace automation and people will build wonderfully unexpected, valuable things with your product or service.

And you can be sure I’ll cheer you on.

After all, I’m in your beta programme.

So I would build workflows with the capabilities you add, and I’d definitely publicise them, albeit in a personal capacity.

And that applies to just about any product or service, iOS, Web, whatever.

Make it Automatic For The People. 🙂

Which is pretty ironic when you consider iOS devices were conceived as the ultimate in “hands-on” machines. 🙂

Breaking News

I just got a Workflow workflow working 🙂 that watches a specific file in Dropbox and acts once it has contents.

I think I can do quite a bit with this: for example, when Linux finishes a task it can update this status file, and that can kick off some Editorial action on iOS.
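On the Linux side, updating the status file could be a few lines of Python. A sketch under assumptions: the Dropbox path and file name are hypothetical, and the file is written under a temporary name and then renamed into place with `os.replace`, so anything reading it sees either the old or the new contents, never a half-written file. The `has_contents` check mirrors the condition my workflow watches for.

```python
import os
import tempfile
from pathlib import Path

# Hypothetical location inside the local Dropbox folder.
STATUS_FILE = Path.home() / "Dropbox" / "status" / "linux-task.txt"


def write_status(message: str, path: Path = STATUS_FILE) -> None:
    """Atomically replace the status file's contents with `message`."""
    path.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temporary file in the same directory, then rename it
    # over the real one in a single step.
    fd, tmp = tempfile.mkstemp(dir=path.parent, prefix=".status-")
    try:
        with os.fdopen(fd, "w") as f:
            f.write(message)
        os.replace(tmp, path)
    except BaseException:
        os.unlink(tmp)
        raise


def has_contents(path: Path = STATUS_FILE) -> bool:
    """True if the status file exists and is non-empty."""
    return path.exists() and path.stat().st_size > 0


# e.g. at the end of a long-running Linux job:
# write_status("study data for Client X ready")
```

Writing an empty string resets the trigger, so the same file can be reused for the next job.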