(Originally posted 2014-06-03.)

Sitting in Dave Gorman’s Broker V9 presentation in Budapest, it struck me that it would be a useful exercise to apply the “Systems Investigation” techniques I write about to Broker running on z/OS. So let’s see how far we can get with SMF 30 Interval records, in the vein of Life And Times Of An Address Space. It’s a nice exercise in its own right [1], but I think it’s also directly useful for looking at Broker itself.

By the way the name IBM Integration Bus is in use as of V9, but I’ll persist with “Broker” in this post.

I have two sets of customer data with Broker in, one active and one where Broker is up but not active.

What Is Broker?

Broker is a multiplatform product family that allows business information to flow between disparate applications across multiple hardware and software platforms. Rules can be applied to the data flowing through the message broker to route and transform the information. The product is an Enterprise Service Bus providing connectivity between applications and services in a Service Oriented Architecture.

The previous paragraph is mostly not my words. In my words I would say you get to connect disparate applications together using pipeline-like constructs called flows. These flows have nodes, akin to pipeline stages.

As well as running on other platforms, Broker runs on z/OS. It writes Statistics and Accounting data in its own SMF 117 record (but this post isn’t about that).

Am I Broker?[2]

An address space is Broker if one of the following sets of conditions is met:

- The program is BPXBATA8 and the Proc Step Name is one of “BROKER”, “EGENV” or “EGNOENV”.

- One of the SMF 30 Usage Data Sections for the address space had a product name of WMB.

These conditions are corroborative but the second condition is possibly simpler to detect than the first.
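The two tests above can be sketched in a few lines of Python. This is only an illustration: it assumes each SMF 30 record has already been decoded into a dictionary, and the key names (`program`, `proc_step`, `usage_sections`, `product_name`) are hypothetical, not real SMF field names.

```python
# Proc Step Names that mark a Broker address space, per the first condition.
BROKER_PROC_STEPS = {"BROKER", "EGENV", "EGNOENV"}


def is_broker(record):
    """Return True if a decoded SMF 30 record looks like a Broker address space.

    `record` is a dict with hypothetical keys; adapt them to whatever your
    own SMF 30 decoding produces.
    """
    # Condition 1: program name plus one of the known Proc Step Names.
    by_program = (
        record.get("program") == "BPXBATA8"
        and record.get("proc_step") in BROKER_PROC_STEPS
    )
    # Condition 2: any Usage Data Section with a product name of WMB.
    by_usage = any(
        section.get("product_name") == "WMB"
        for section in record.get("usage_sections", [])
    )
    return by_program or by_usage
```

Either condition on its own is treated as sufficient here. Because the two are corroborative, you could equally report the cases where only one of them fires.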

All the address spaces for a Broker instance have the same job name (but, obviously, different job IDs).

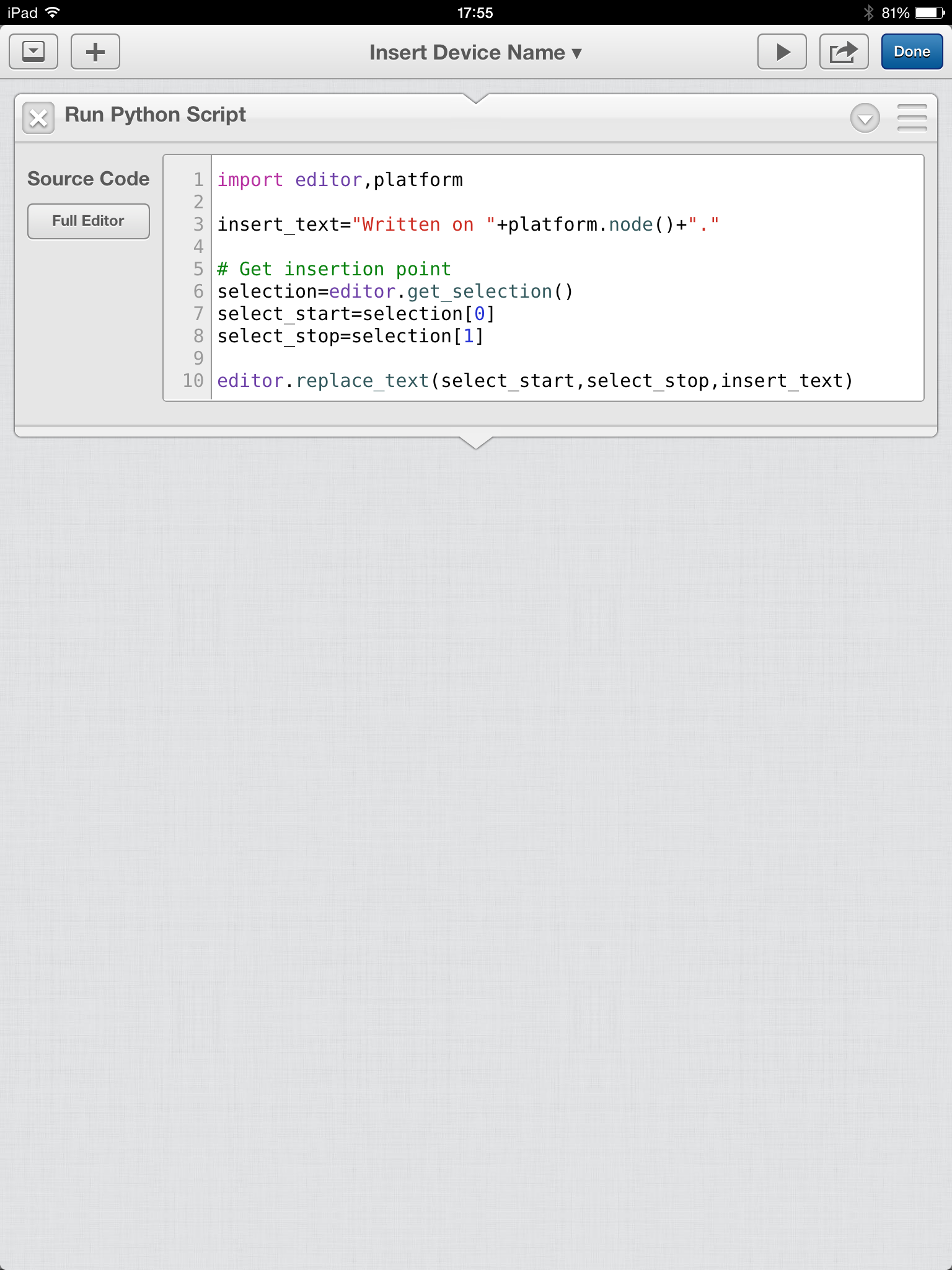

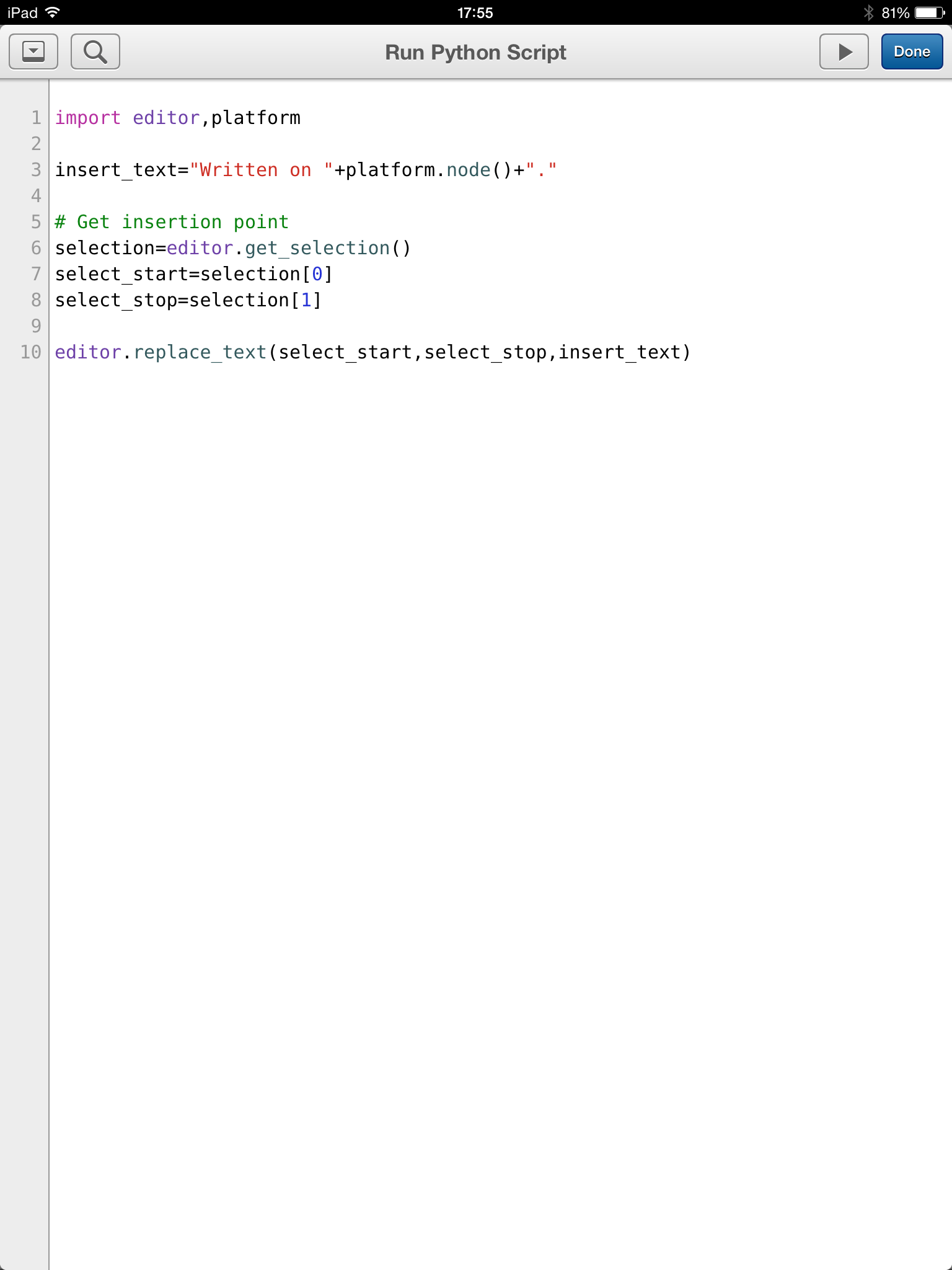

The structure of a Broker instance is as shown here:

Broker Instance BRK1 has a Control Address Space and three others.

Execution Groups

Broker flows run inside Execution Groups, each of which is an address space. The step name is different for each Execution Group, being the last 8 characters of the Execution Group Name.[3]

In the two sets of data one has a handful of Execution Groups, each with a mnemonic name.[4] The other has no Execution Groups, so no flows can be deployed to this one.

In the example diagram above, there are three Execution Groups: Tom, Dick and Harry. Each has its own flows.

There’s one further piece of information we can glean about execution groups:

If the Proc Step is EGENV the Execution Group has its own specific profile. If it’s EGNOENV it doesn’t. In the case of the customer with Execution Groups they are all EGNOENV.
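As a rough sketch of those two rules, the following Python derives the step name you would expect for an Execution Group and interprets the Proc Step Name. The function names are mine, purely for illustration, and the Execution Group name in the usage note is invented.

```python
def expected_step_name(execution_group_name):
    """Step name is the last 8 characters of the Execution Group name."""
    return execution_group_name[-8:]


def has_own_profile(proc_step):
    """Interpret the Proc Step Name of an Execution Group address space.

    Returns True for EGENV (own specific profile), False for EGNOENV,
    and None for anything else (for example the Control Address Space).
    """
    if proc_step == "EGENV":
        return True
    if proc_step == "EGNOENV":
        return False
    return None
```

For a made-up Execution Group called `PAYMENTSROUTING` the expected step name would be `SROUTING`. A name of 8 characters or fewer comes back unchanged.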

Which Broker?

The Broker instance is given by the job name which, as I said, is the same for the Control Address Space and all the Execution Groups.

Which Version?

You can use the Usage Data Section to establish the Broker version, except I’m seeing “NOTUSAGE” in both the sets of data I’ve seen – which doesn’t help distinguish Version 7 from 8 from 9. But I’ve only got two sets of data…

CPU

Drilling down into individual address spaces / Execution Groups pays dividends when it comes to CPU:

For the customer with a handful of Execution Groups, only two use significant amounts of CPU. The total is about 3.5 engines’ worth: one Execution Group uses 60% of that (roughly 2.1 engines) and the other uses 40% (roughly 1.4 engines).
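The arithmetic behind “engines’ worth” and the percentage split is simple enough to sketch. The CPU seconds and the 15-minute interval below are invented numbers chosen to reproduce the proportions described, not the customer’s data, and the Execution Group names are placeholders.

```python
def engines_used(cpu_seconds, interval_seconds):
    """Average number of engines kept busy over the interval."""
    return cpu_seconds / interval_seconds


def cpu_shares(cpu_seconds_by_group):
    """Fraction of the total CPU consumed by each Execution Group."""
    total = sum(cpu_seconds_by_group.values())
    if total == 0:
        return {name: 0.0 for name in cpu_seconds_by_group}
    return {name: cpu / total for name, cpu in cpu_seconds_by_group.items()}


# Invented sample: CPU seconds per Execution Group in one 900-second interval.
sample = {"EG_A": 1890.0, "EG_B": 1260.0, "EG_C": 0.0}
total_engines = engines_used(sum(sample.values()), 900)
shares = cpu_shares(sample)
```

With these sample values the total comes to 3.5 engines, split 60% and 40% between the two busy Execution Groups.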

There was also a tiny amount of zIIP CPU usage in the active case. As you can write nodes in Java, that’s not surprising. You can also access DB2 in a flow, but whether it’s the right kind of access for DRDA zIIP eligibility I don’t know.

Memory Usage

There’s good news here:

Because Broker is 64-Bit the vast majority of virtual storage is allocated above the bar and memory (and Aux / Flash) usage numbers are accurate. For 24-Bit and 31-Bit Virtual Storage I can only see Allocated but, as there’s not much of it, I can live with treating that as Used Real without too much overstatement.

The LE Heap is the main user of memory, and thank goodness it’s 64-Bit: I’m seeing values from a few hundred MB to several GB. In the customer with no Execution Groups much of this is paged out to Aux. I can tell this because, as I said, 64-Bit Virtual is reported in SMF 30 as either backed by real memory or Aux / Flash.
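Given that split, the proportion of an address space’s 64-Bit storage that has been paged out is a one-line calculation. A minimal sketch, assuming you have already extracted the two numbers in the same units; the parameter names are hypothetical and the values in the usage note are made up.

```python
def aux_fraction(real_backed, aux_backed):
    """Fraction of backed 64-bit virtual storage that sits on Aux / Flash.

    Both arguments must be in the same units (for example MB).
    Returns 0.0 when nothing is backed at all.
    """
    backed = real_backed + aux_backed
    if backed == 0:
        return 0.0
    return aux_backed / backed
```

For example, an address space with 500 MB in real memory and 1500 MB on Aux would have three quarters of its backed 64-Bit storage paged out.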

Who Do I Talk To?

As those of you who’ve seen me present Life And Times Of An Address Space know, I see two main ways of figuring out who an address space talks to, without going deeper than SMF 30:

- Usage information in SMF 30.

- XCF Member information in SMF 74–2.[5]

In the data I’ve seen Broker doesn’t directly use XCF signalling (and I think that’s generally true of Broker) so I don’t expect 74–2 data to show anything.

I do see Usage information for other products associated with the address spaces:

- In one case I see DB2, WebSphere MQ and WebSphere Transformation Extender (WTX).

- In the other case I just see DB2 and WebSphere MQ.

In both cases I see the DB2 and MQ versions and subsystem names. In the WTX case I again see “NOTUSAGE” but this is a single data point.
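Collecting this “who do I talk to” picture per Broker instance might look something like the sketch below. It assumes decoded SMF 30 records as dictionaries; the keys `job_name`, `usage_sections`, `product_name`, `version` and `subsystem` are hypothetical names, not real SMF fields.

```python
from collections import defaultdict


def connected_products(records, own_product="WMB"):
    """Map each job name to the other products seen in its Usage sections.

    Sections for the address space's own product (WMB by default) are
    skipped, leaving only the products it connects to.
    """
    talks_to = defaultdict(set)
    for record in records:
        for section in record.get("usage_sections", []):
            if section.get("product_name") == own_product:
                continue
            talks_to[record["job_name"]].add(
                (
                    section.get("product_name"),
                    section.get("version"),
                    section.get("subsystem"),
                )
            )
    return dict(talks_to)
```

Using a set means the same DB2 or MQ subsystem reported by several Execution Groups, or in several intervals, shows up only once per Broker instance.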

Workload Manager

I see, of course, WLM Workload, Service Class and Report Class in SMF 30. One of the features of Broker is you can classify each Execution Group (address space) separately to WLM. I’ve not seen it done but I’m certain that would be reflected in SMF 30.

I/O and Database

In the case of the customer with active flows I see quite a high EXCP rate (290 per second), with one Execution Group performing about 90% of this. I also see a small amount of Unix File System I/O, this time mainly in a different Execution Group.

I would expect I/O to vary depending on the nature of the flows.

I was not in a position to look at DB2 but some flows process SQL so I would expect DB2 Accounting Trace to be of some use here.

Handling Multiple Address Spaces With The Same Name

As I said, a complete Broker Instance comprises a set of address spaces, each with the same name. My code generally summarises all the address spaces with the same name into one row per reporting interval (or higher). That’s what the SLR Summary Table does.

In this case that level of summarisation is unhelpful. So I retained the Log Table, which keeps a separate row for each JobId, and wrote reporting to go against this Log Table – but only if the sum of In and Out address spaces with a given name is more than 1.
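My actual reporting runs against SLR tables, but the idea can be sketched in Python. Note the substitution: instead of summing In and Out address space counts, this sketch counts distinct job IDs per job name within each interval, which is an approximation of the same test. The row keys (`interval`, `job_name`, `job_id`) are hypothetical.

```python
from collections import defaultdict


def multi_address_space_rows(log_rows):
    """Keep only log rows whose job name has more than one job ID in the
    same interval, i.e. names shared by several address spaces."""
    job_ids = defaultdict(set)
    for row in log_rows:
        job_ids[(row["interval"], row["job_name"])].add(row["job_id"])
    return [
        row
        for row in log_rows
        if len(job_ids[(row["interval"], row["job_name"])]) > 1
    ]
```

Rows for a job name that appears only once in an interval are dropped, since the summarised view already describes them completely.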

It more or less doubles the size of my performance database doing it this way. But for cases like this it’s worth it.

There are some other cases where this approach might well yield dividends. A good example might be DB2 Workload-Manager Stored Procedure address spaces (which I tend to term Server Address Spaces). Potentially these can be legion, with the same job name.

Conclusion

I think you can do quite a bit with detecting and analysing Broker. To really go to town on it you do need SMF 117 (or the Distributed equivalent), of course. And I don’t yet know what DB2 Accounting Trace (SMF 101) would reveal.

I haven’t, in this post, written about a time-driven view using SMF 30. After all, these are Interval records. I’m about to teach my code to pump out some graphs that will help me do that. Stay tuned.

It’s been an interesting exercise which has stretched my code[6]. I’m, in parallel, applying the code and techniques to CICS, CTG, DB2, MQ, and IMS [7] groups of address spaces. I might write about some of those too. Again, stay tuned.

[1] Which should make it interesting and useful for z/OS customers who don’t have Broker on z/OS.

[2] As opposed to Broken. 🙂

[3] The Control Address Space name, in SDSF, is the same as the job name, being the Broker name. In SMF 30 Interval records, however, it just says “STARTING”.

[4] Actually they have two LPARs, each with a Broker instance on. The Execution Groups are the same in each, except one Execution Group where the two instances have slightly different spellings of the name. I’m not sure if this is deliberate or a mistake.

[5] If you process SMF 30 you probably process 74–2 so I don’t count that as deeper.

[6] Which is, in my book, always a good thing. 🙂

[7] I generated test cases around specific IBM products. I might well add to this list. And I just applied the code to a group of jobs beginning “NTA” which explained a CPU spike early one morning on a customer system. (Even though I could have used step- and job-end SMF 30 records (Subtypes 4 and 5), Interval records helped with this spike rather better.)